Data Structures for Muggles

-min_7cec81cba2ba1adb3e5c3bedbe62b9f9.jpg)

Data Structures for Muggles

CX330Prologue

All the following example will be shown in C Programming Language or pseudo code.

This is the note when I was taking the course in NCKU, 2024. Blablabla…

Finally, I would like to declare that almost every photo I use comes from the handouts of my course at NCKU, provided by the professor. If any photo comes from another source, I will give proper credit in the caption or description of the image.

Complexity

Space Complexity

- The amount of memory that it needs to run to completion.

- Total space requirement = Fixed space requirement + Variable space requirements

- We usually care more about the Variable space requirements.

For example, we have the following code like this.

Iterative

float sum(float list[], int n)

{

float tempsum=0;

int i;

for (i = 0; i < n; i++){

tempsum += list[i];

}

return tempsum;

}In this code, we DO NOT copy the array list, we just passes all parameters by value, so the variable space requirements . Here’s another example, let’s look at the code first.

Recursive

float rsum(float list[], int n)

{

if (n) return rsum(list,n-1)+list[n-1];

return 0;

}In this case, 1 recursive call requires K bytes for 2 parameters and the return address. If the initial length of list[] is N, the variable space requirements bytes.

Time Complexity

- The amount of computer time that it needs to run to completion.

- Total time requirement = Compile time + Execution time

- We usually care more about the Execution time.

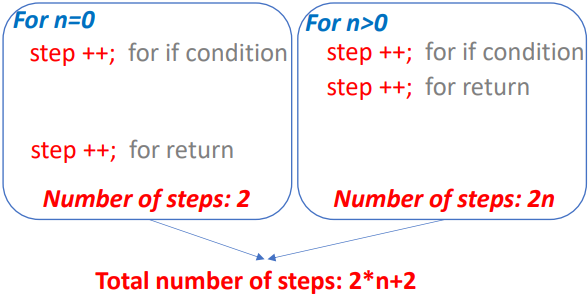

For example, this is a segment with execution time independent from the instance characteristics.

float sum(float list[], int n)

{

float tempsum=0;

int i;

for (i = 0; i < n; i++){

tempsum += list[i];

}

return tempsum;

}On the other hand, a segment with execution time dependent on the instance characteristics will be like this.

float rsum(float list[], int n)

{

if (n){

return rsum(list,n-1)+list[n-1];

}

return 0;

}For given parameters, computing time might not be the same. So, we should know the following cases.

- Best-case count: Minimum number of steps that can be executed.

- Worst-case count: Maximum number of steps that can be executed.

- Average count: Average number of steps executed.

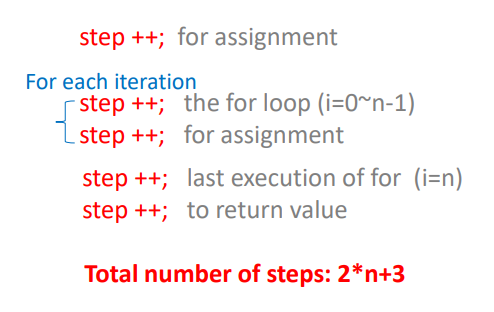

Here, I’m going to use insertion sort to show you the difference between the best-case & the worst-case. If you don’t know what it is, you can go check it out here. Following is a snippet of the insertion sort algorithm in C.

for (j = i - 1; j >= 0 && t < a[j]; j--){

a[j + 1] = a[j];

}Worst-case

- a[0 : i-1] = [1, 2, 3, 4] and t = 0

- 4 compares

- a[0 : i-1] = [1, 2, 3, …, i] and t = 0

- i compares

For a list in decreasing order, the total compares will be

Best-case

- a[0 : i-1] = [1, 2, 3, 4] and t = 5

- 1 compare

- a[0 : i-1] = [1, 2, 3, …, i] and t = i + 1

- 1 compare

For a list in increasing order, the total compares will be

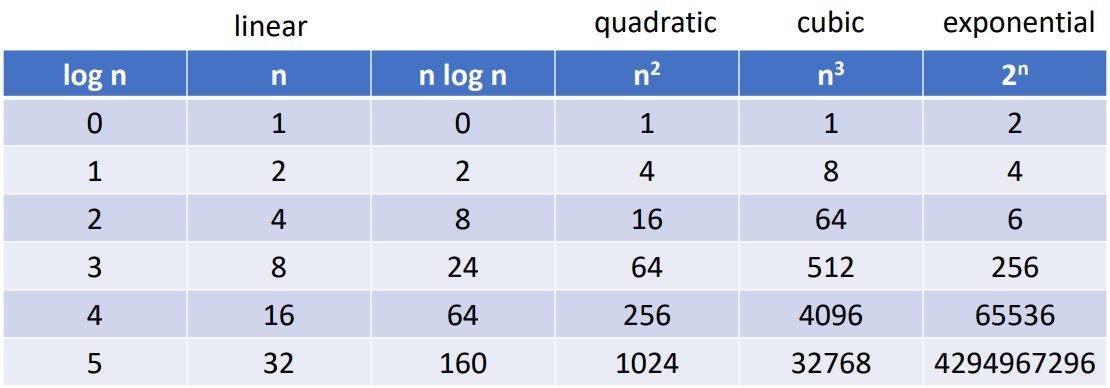

Asymptotic Complexity

Sometimes determining exact step counts is difficult and not useful, so we will use the Big-O notation to represent space and time complexity. Here’s a question, which algorithm is better? The one with or . The answer is, both of them are , so the performances are similar.

Now, we jump back to the example in previous part. What is the time complexity of insertion sort in the Big-O representation?

- Worst case

- Best case

Why Algorithm Is Important

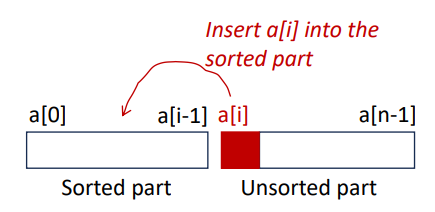

This is a table of how various functions grow with .

As you can see, the exponential one grows exponentially, which means a little variation of n can increase a lot of computing time. In fact, on a computer performing 1 billion (109 ) steps per second. steps require 18.3 mins, steps require 13 days, and steps require 310 years! So that’s why improving algorithm may be more useful than improving hardware sometimes.

Summary

- Space and time complexity

- Best case, worst case, and average

- Variable and fixed requirements

- Asymptotic complexity

- Big-O

- Example:

- Insertion sort

- Selection sort

More information about complexity will be introduce when I take the algorithm course next year, or you can search for some sources if you really want to learn more about it.

Arrays

Intro

It’s a collection of elements, stored at contiguous memory locations. Each element can be accessed by an index, which is the position of the element within the array. It’s the most fundamental structure and the base of other structures.

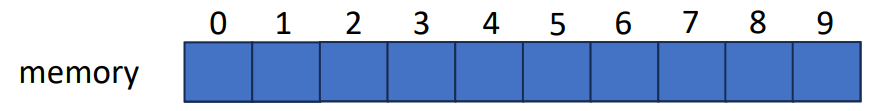

1-D Array

This is an 1-D array with length 10. We can access the first element by the index 0 (zero-indexing). But what should we do if the memory size we need is changing and not always the same? Will it be suitable for all kind of situation?

The answer is negative, and that’s why we need something called Dynamic Memory Allocation. When we need an area of memory and do not know the size, we can call a function malloc() and request the amount we need. The following is an code implementation of a dynamic 1-D array storing integers.

int size;

scanf("%d", &size); // Ask an arbitrary positive number as the size of the array

int *array = (int *)malloc(size * sizeof(int)); // Finish the memory allocation

// Do operations to the array here

free(array); // Free the memory after use- The

free()function deallocates an area of memory allocated bymalloc().

So, that’s how to implement the dynamic 1-D array in C. Now, here comes another question. What if we want to store some data like the student ID and his exam scores? Should we store it in an 1-D array?

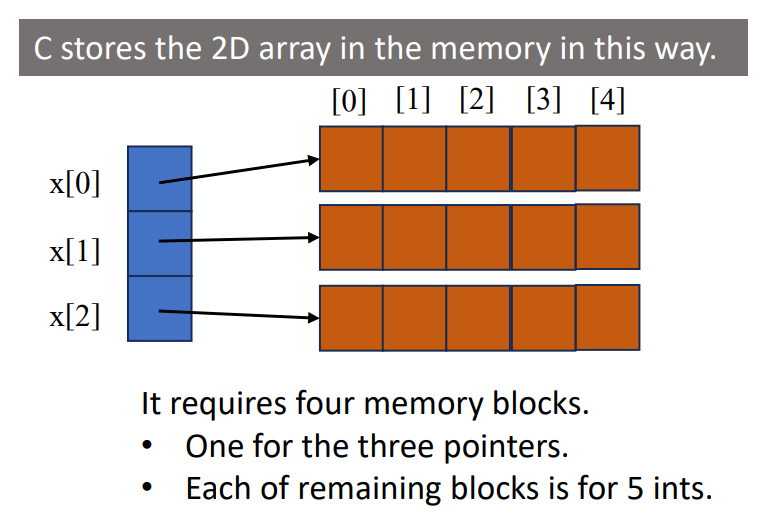

2-D Array

To answer the question above, we can choose 2-D array as the data structure to store something like that. C uses array-of-array representation to represent multidimensional array. The following is an example diagram to show how the things work in an 2-D array in C.

To be more specific, it shows that the first dimension of the array (blue part) stores the pointers that pointing to the second dimension arrays (orange part), which are storing integer or other data types. Following is an example code of a dynamic 2-D array.

int rows, cols;

// Get the dynamic size

scanf("%d", &cols);

scanf("%d", &rows);

// Allocate the first dimension (blue part in the diagram above)

int **array = (int **)malloc(cols * sizeof(int *));

// For every rows, allocate the rows size for the row (orange part)

for (int i = 0; i < cols; i++) {

array[i] = (int *)malloc(rows * sizeof(int));

}Row-Major and Column-Major

To know what is row-major & column-major, we can take a look at this example. If we have a matrix called A.

- Column-major

- Elements of the columns are contiguous in memory

- Top to bottom, left to right

- Matlab

- Fortran

- Row-major

- Elements of the rows are contiguous in memory

- Left to right, top to bottom

- C

- C++

Stacks

Intro

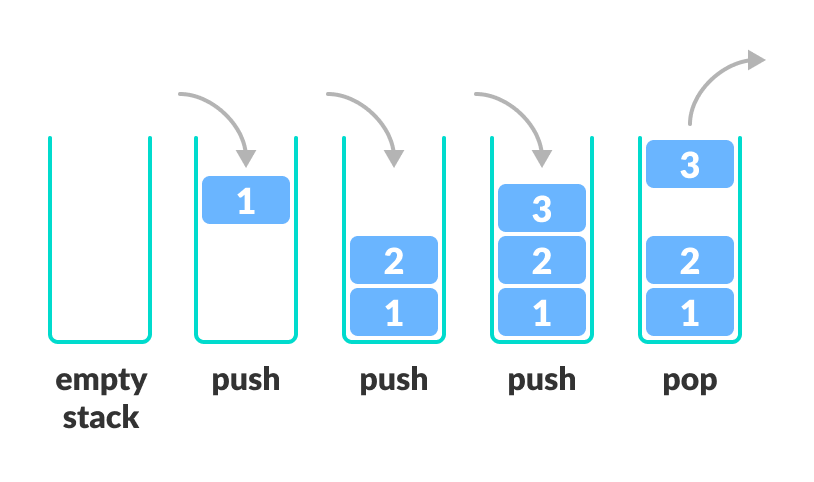

Stack is also an fundamental structure that is opposite from Queue (will be mentioned later), it has some properties

- Last-In-First-Out (LIFO)

- Like a tennis ball box

- Basic operations

- Push: Insert an element to the top of the stack

- Pop: Remove and return the top element in the stack

- Peek/Top: Return the top element in the stack

- IsEmpty: Return true if the stack is empty

- IsFull: Return true if the stack is full

The following is the diagram of the push and pop operation of stacks.

Application: Balanced Brackets

- Check whether the brackets are balanced.

- Examples:

- “]” → False

- “(a+b)*c-d” → True

- Steps:

- Scan the expression from left to right

- When a left bracket, “[”, “{”, or “(”, is encountered, push it into stack.

- When a right bracket, “]”, “}”, or “)”, is encountered, pop the top element from stack and check whether they are matched.

- If the right bracket and the pop out element are not matched, return False.

- After scanning the whole expression, if the stack is not empty, return False.

Dynamic Stacks

If we want to dynamically allocate the size of the stack, we should still use malloc(). Here’s an example using dynamic arrays.

- Headers and declarations

int *stack;

int capacity = 1; // Initial capacity

int top = -1; // Representing empty stack- Create stack

void createStack() {

stack = (int*)malloc(capacity * sizeof(int));

if (stack == NULL) {

printf("Memory allocation failed\n");

exit(1);

}

}- Array doubling when stack is full

void stackFull() {

capacity *= 2; // Double the capacity

stack = (int*)realloc(stack, capacity * sizeof(int));

if (stack == NULL) {

printf("Memory allocation failed\n");

exit(1);

}

printf("Stack expanded to capacity: %d\n", capacity);

}- Check if the stack is empty

int isEmpty() {

return top == -1;

}- Push

void push(int value) {

if (top == capacity - 1) { // If the stack is full, double it

stackFull();

}

stack[++top] = value;

}- Pop

int pop() {

if (isEmpty()) {

printf("Stack underflow. No elements to pop.\n");

return -1;

}

return stack[top--];

}- Peek (or Top)

int peek() {

if (isEmpty()) {

printf("Stack is empty.\n");

return -1;

}

return stack[top];

}Queues

Intro

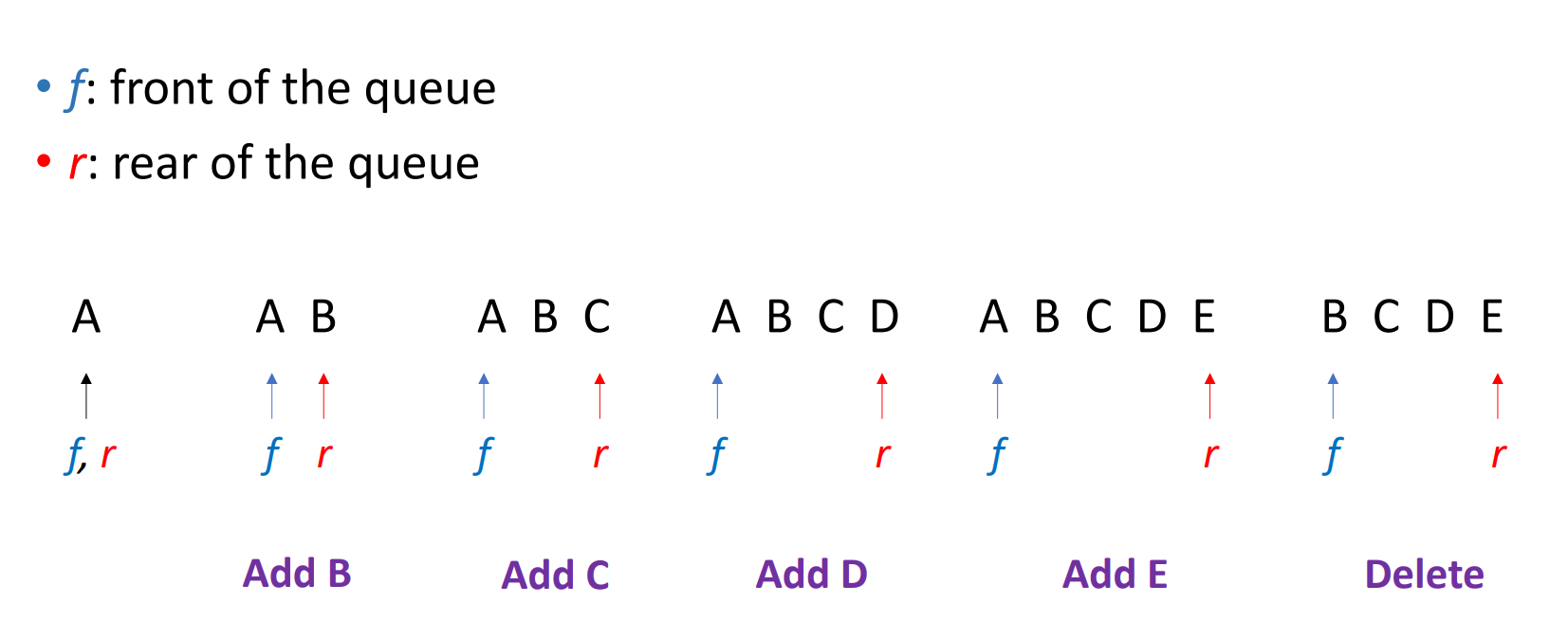

As I mentioned, queues are the opposite of stacks. That is because although they are both linear or ordered list, they have totally different properties.

- First-In-First-Out (FIFO)

- New elements are added at the rear end

- Old elements are deleted at the front end.

- Basic operations

- Enqueue

- Insert element at the rear of a queue

- Dequeue

- Remove element at the front of a queue

- IsFull

- Return true if the queue is full. (

rear == MAX_QUEUE_SIZE - 1)

- Return true if the queue is full. (

- IsEmpty

- Return true if the queue is empty (

front == rear)

- Return true if the queue is empty (

- Enqueue

The following is the diagram of the insertion and deletion of the queues.

If now e have a queue that contains 5 elements but has the length 6 (null stands for no element there).

After we delete an element from the front and add another one at the rear, it will be like this.

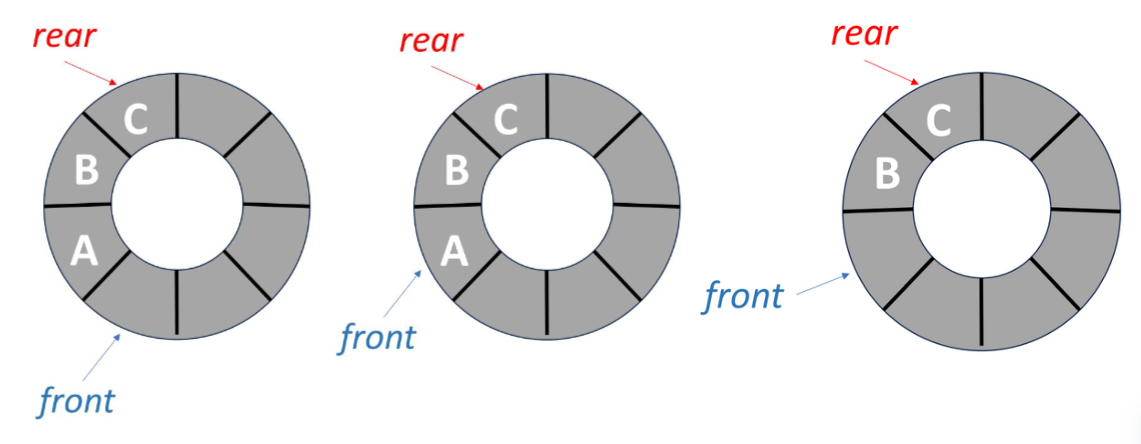

The queue will be regarded as full since rear == MAX_QUEUE_SIZE, but we know that actually some empty space remains. So, how to fix it? We can think about when a customer at front of the line leaves, what will other customers do?

They move forward. So, we should shift other elements forward after deletion. That way, the queue became

Voila! We fix it! But if we do this at every deletion, the program will be way too slow. So here’s the next question, what should we do to fix it?

Circular Queue

In a circular queue, the front and the rear will have some differences comparing with the normal queue.

- front: One position counterclockwise from the position of the front element.

- rear: The position of the rear element.

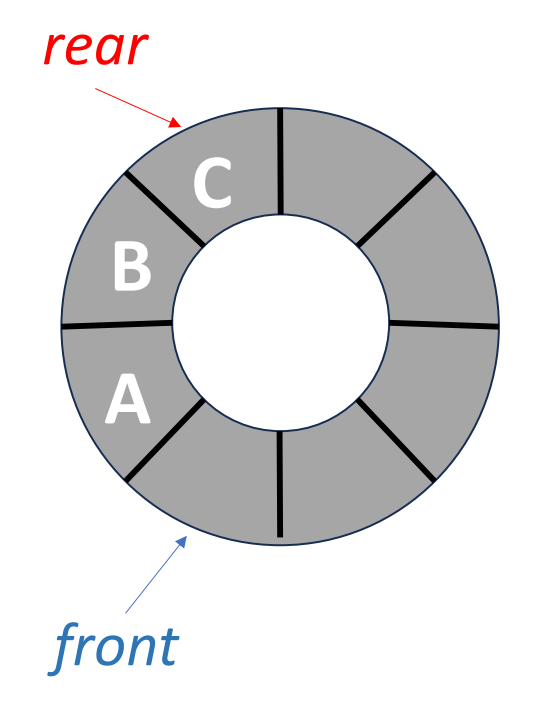

This is how it looks like.

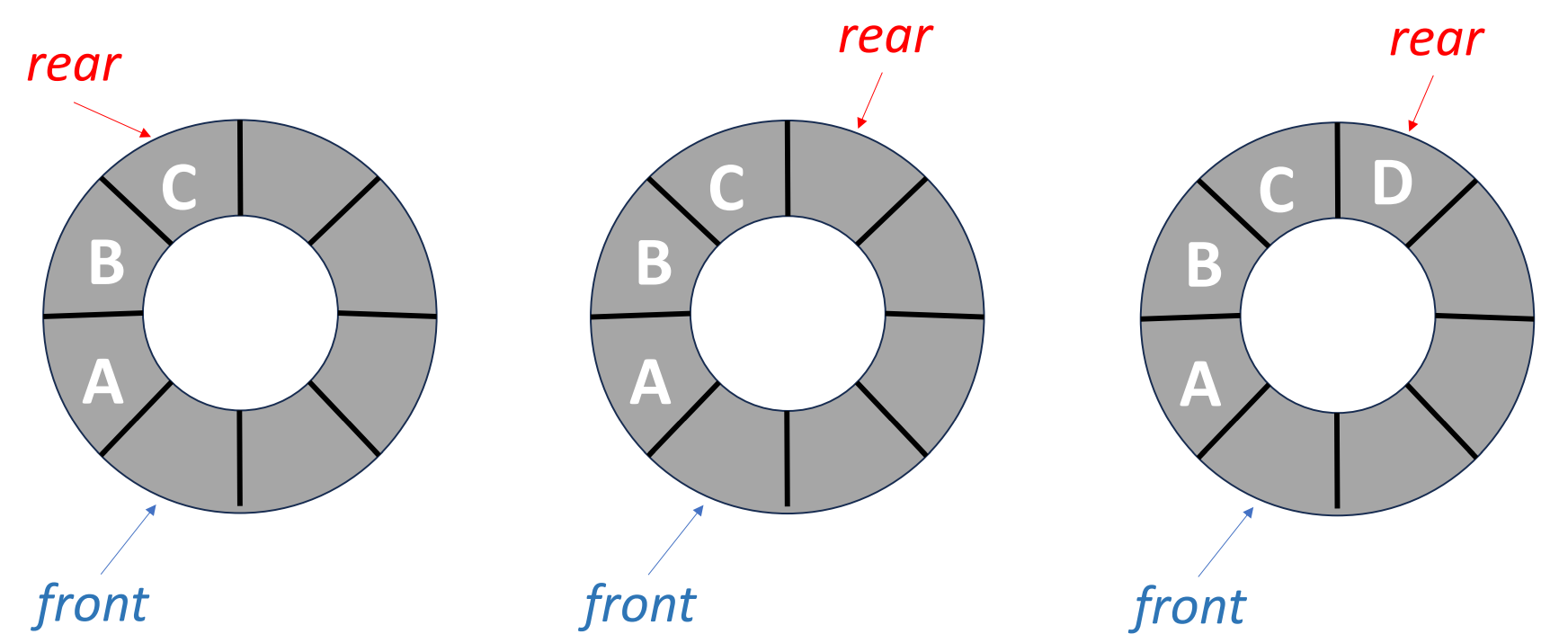

The addition or insertion in an circular queue has the following steps:

Move rear one position clockwise:

rear = (rear + 1) % MAX_QUEUE_SIZEPut element into

queue[rear]

And the deletion steps are below:

Move front one position clockwise:

front = (front + 1) % MAX_QUEUE_SIZEReturn and delete the value of

queue[front]

In a circular queue, how to know a queue is full? We can think about when a queue is keep adding elements until it’s full, what will the front and rear go? The answer is, when elements are keep being added to a circular queue, the rear will keep going clockwise and finally equals to the front.

On the other hand, we can also use this to determine if the queue is empty. Because if the elements in the queue are keep being deleted, the front will keep moving clockwise until it equals to rear.

So if the condition to determine the full and empty are the same (front == rear), how can we tell which is which?

Here’s some of the solutions, but there are still a lot of ways to conquer this.

- Set a

LastMoveIsFront()boolean value to recognize the last move is front or rear. - Set another variable

countand modify it everytime we delete or add something. - Save an empty slot in the circular queue, so that when the queue is full, the rear will be at the position “just before the front” (because one empty slot is reserved), thus avoiding the confusion with the condition

rear == frontwhich indicates an empty queue.

Linked Lists

Intro

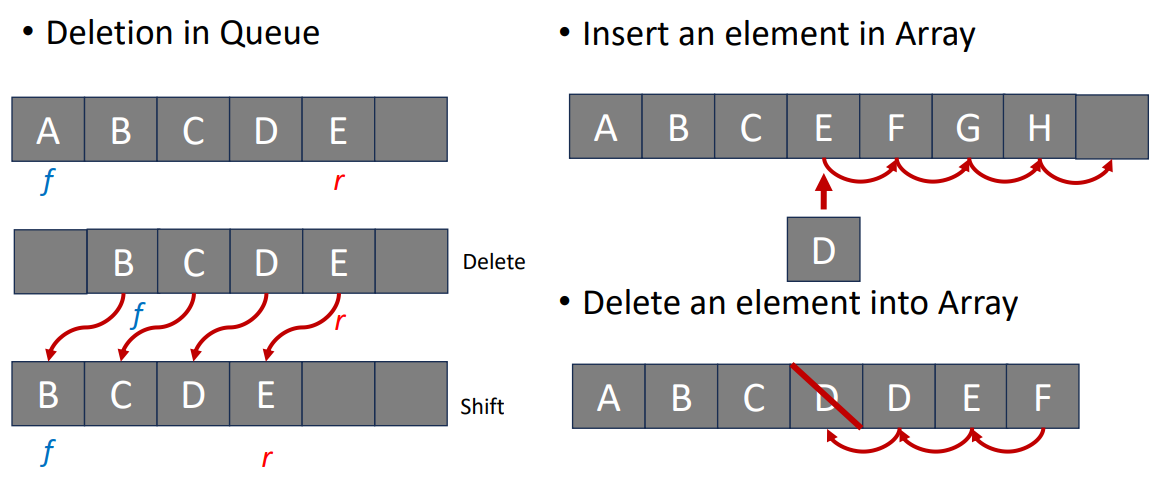

First, I want to talk about the deletion and insertion in sequential representation, which is mentioned a lot in the previous parts. When we deleting or inserting things in a queue or an array, there’re always be some empty spaces created by the operations (See the picture below for details.) And that means we have to move many elements many times to maintain a sequential data structure.

So, to avoid this waste, we want to store data dispersedly in memory, just like the picture below.

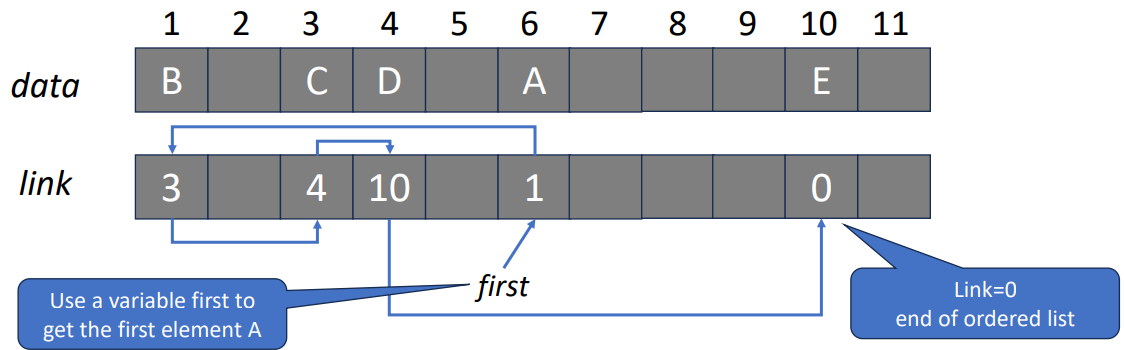

But now, we are facing another problem. How do we know the order of the data if we store an sequential data like this? We use linked list! Let’s start talking about linked list right away. Here is the features of it.

- In memory, list elements are stored in an arbitrary order.

- The fundamental unit is called a node.

- In a normal linked list, a node contains data and link/pointer.

- The link is used to point to the next element.

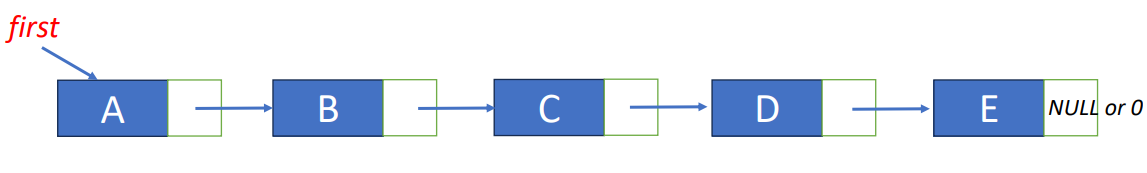

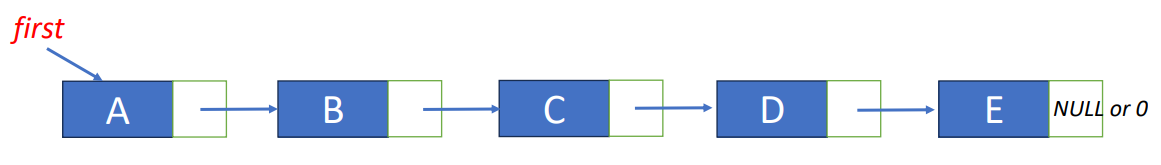

To answer the previous question, we need to talk about why linked list is called linked list. It’s because every element in the list linked to the very next data, so we can know the sequence even thought we didn’t save them sequentially. To let you better understand, the following graph is the illustration of how it works.

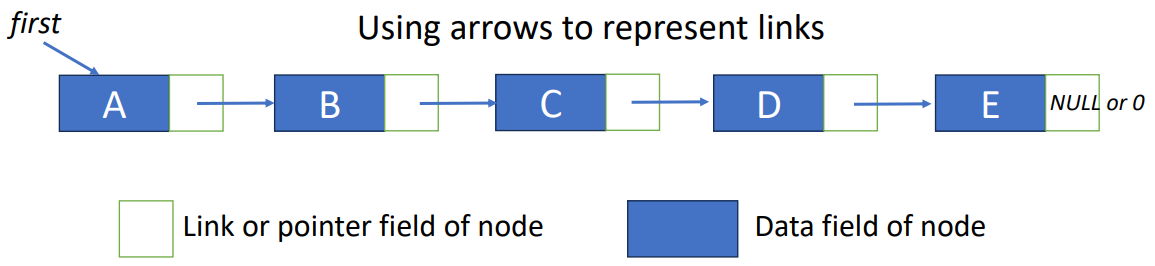

Another way that usually seen to draw a linked list is like this.

Operations

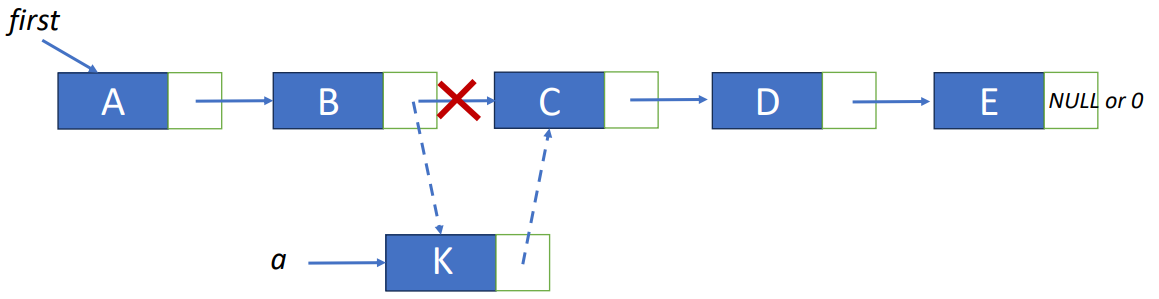

Concept of Insertion

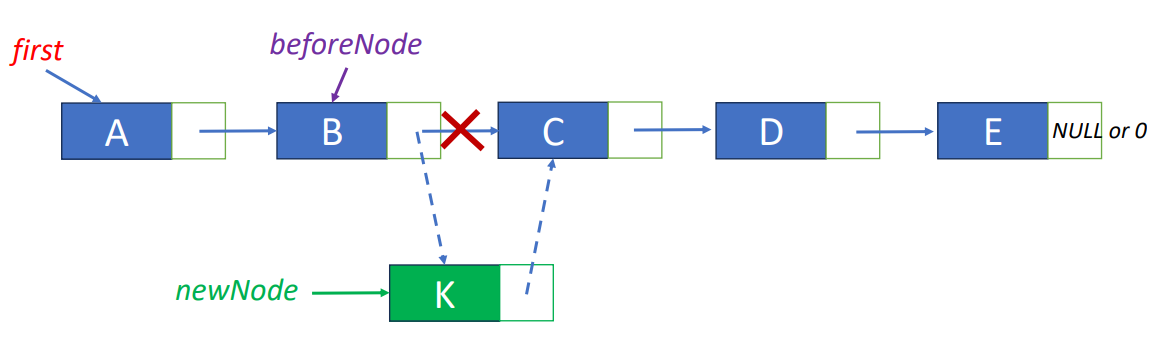

To insert K between B and C, we have to follow the steps below.

- Get an unused node a.

- Set the data field of a to K.

- Set the link of a to point to C.

- Set the link of B to point to a.

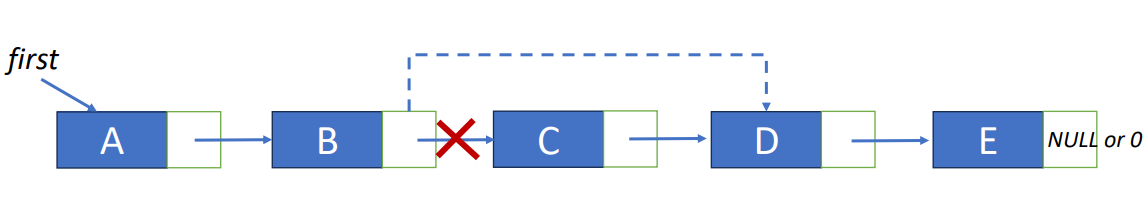

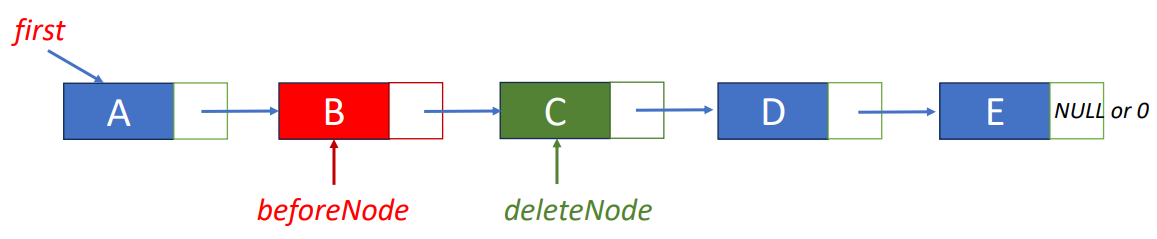

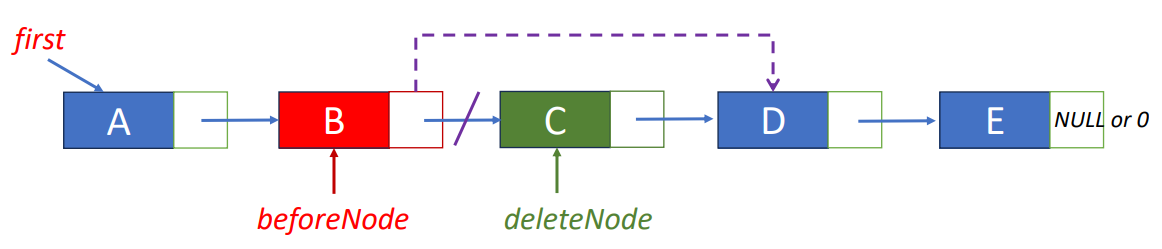

Concept of Deletion

To delete C in the linked list, we have to follow the steps below.

- Find the element precedes C.

- Set the link of the element to the position of D.

Define A Node in C

typedef struct listNode *listPointer;

typedef struct {

char data;

listPointer link;

} listNode;Get(0)

desiredNode = first; // get the first node

return desiredNode->data;Get(1)

desiredNode = first->link; // get the second node

return desiredNode->data;Delete “C”

- Step 1: find the node before the node to be removed

beforeNode = first->link;- Step 2: save pointer to node that will be deleted

deleteNode = beforeNode->link;- Step 3: change pointer in beforeNode

beforeNode->link = beforeNode->link->link;

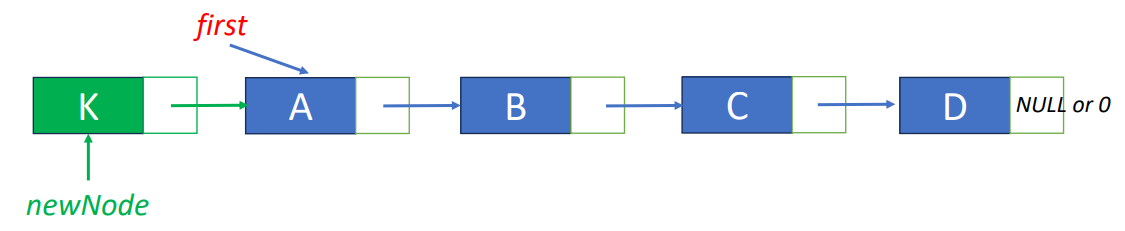

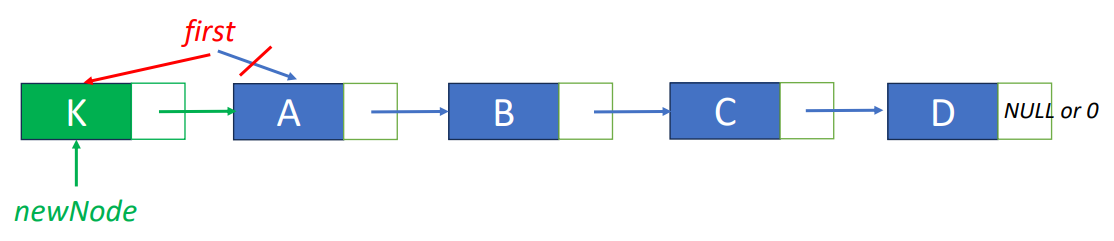

free(deleteNode);Insert “K” before “A"

- Step 1: get an unused node, set its data and link fields

MALLOC( newNode, sizeof(*newNode));

newNode->data = ‘K’;

newNode->link = first;- Step2: update first

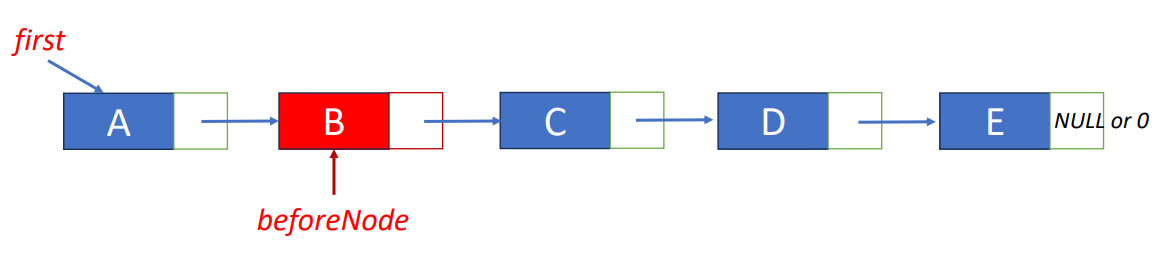

first = newNodeInsert “K” after “B”

beforeNode = first->link;

MALLOC( newNode, sizeof(*newNode));

newNode->data = ‘K’;

newNode->link = beforeNode->link;

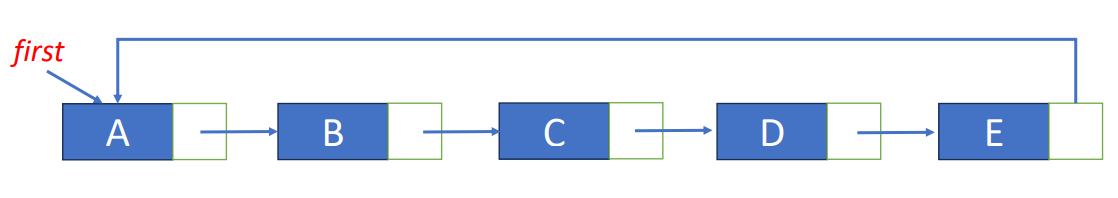

beforeNode->link = newNode;Circular Linked List

- The last node points to the first node.

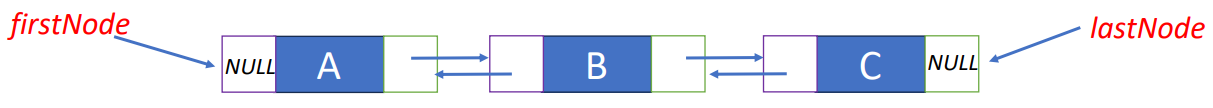

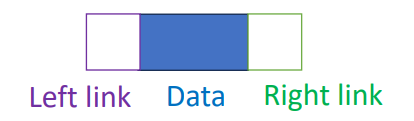

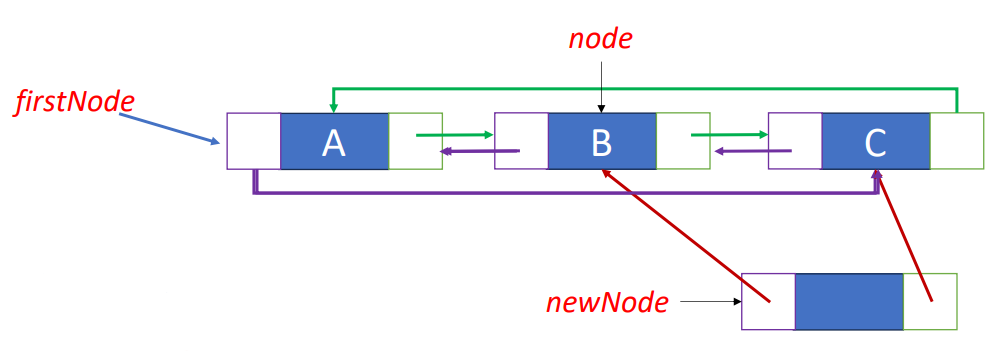

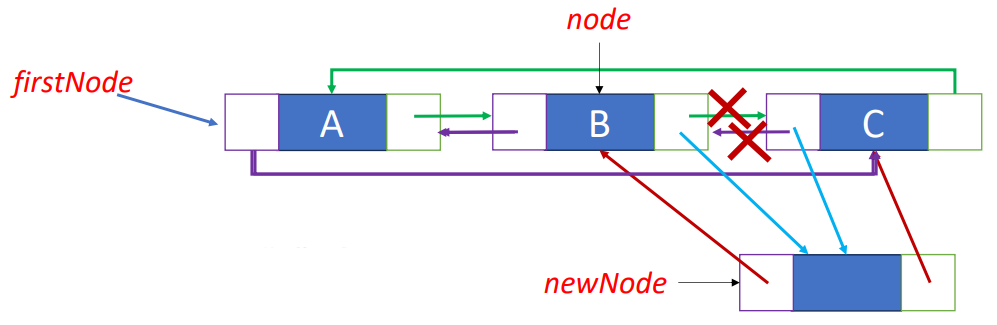

Doubly Linked List

In general linked list, to find an element will always start at the beginning of the list, but it’s not the case in doubly linked list!

- Right link: forward direction

- Left link: backward direction

Node in Doubly Linked List

typedef struct node *nodePointer;

typedef struct node{

nodePointer llink;

element data;

nodePointer rlink;

};Insert “K” after “B”

newNode->llink = node;

newNode->rlink = node->rlink;node->rlink->llink = newNode;

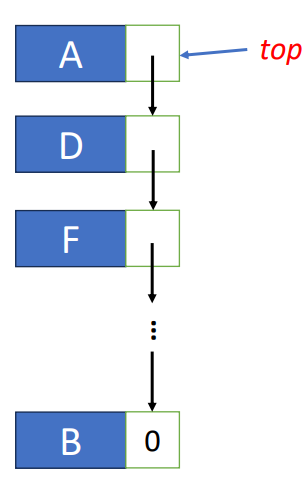

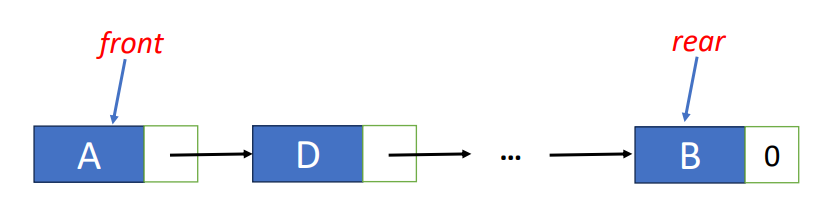

node->rlink = newNode;Linked Stacks & Queues

- Linked stack

- Push: add to a linked list

- Pop: delete from a linked list

- Linked queue

- AddQ: add to a rear of a linked list

- DeleteQ: delete the front of a linked list

Summary

- Linked lists

- Doubly linked lists

- Doubly circular linked lists

- Operations: Get, Insert, and Delete

- Applications:

- Linked stacks

- Linked queues

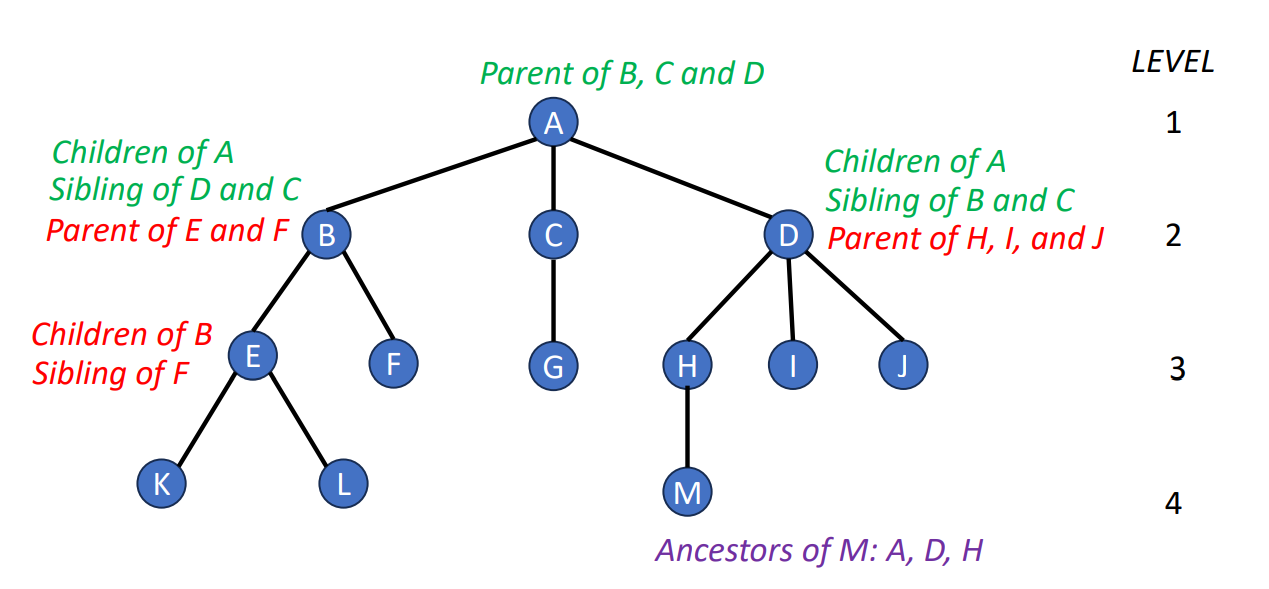

Trees & Binary Trees

Intro

Tree is an data structure that looks like a genealogy, so there’s a lot of terms that similar to the terms in a family. Now, let’s introduce some terms about the trees.

- Degree of a node

- number of subtrees

- Degree of a tree

- maximum of the degree of the nodes in the tree

- Height (depth) of a tree

- maximum level of any node in the tree

- Leaf (or Terminal)

- nodes having degree zeros

- Children

- roots of subtrees of a node X

- Parent

- X is a parent

- Sibling

- children of the same parent

- Ancestor

- all the nodes along the path from the root to the node

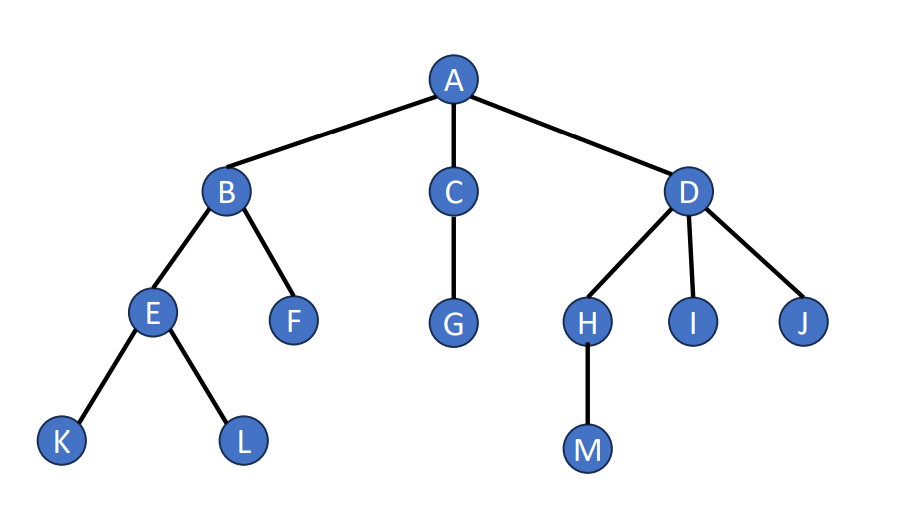

List Representation

If we want to represent a tree in a list format, we can use this mathod. Following is an example of a tree and its list representation.

It’s list representation will be like .

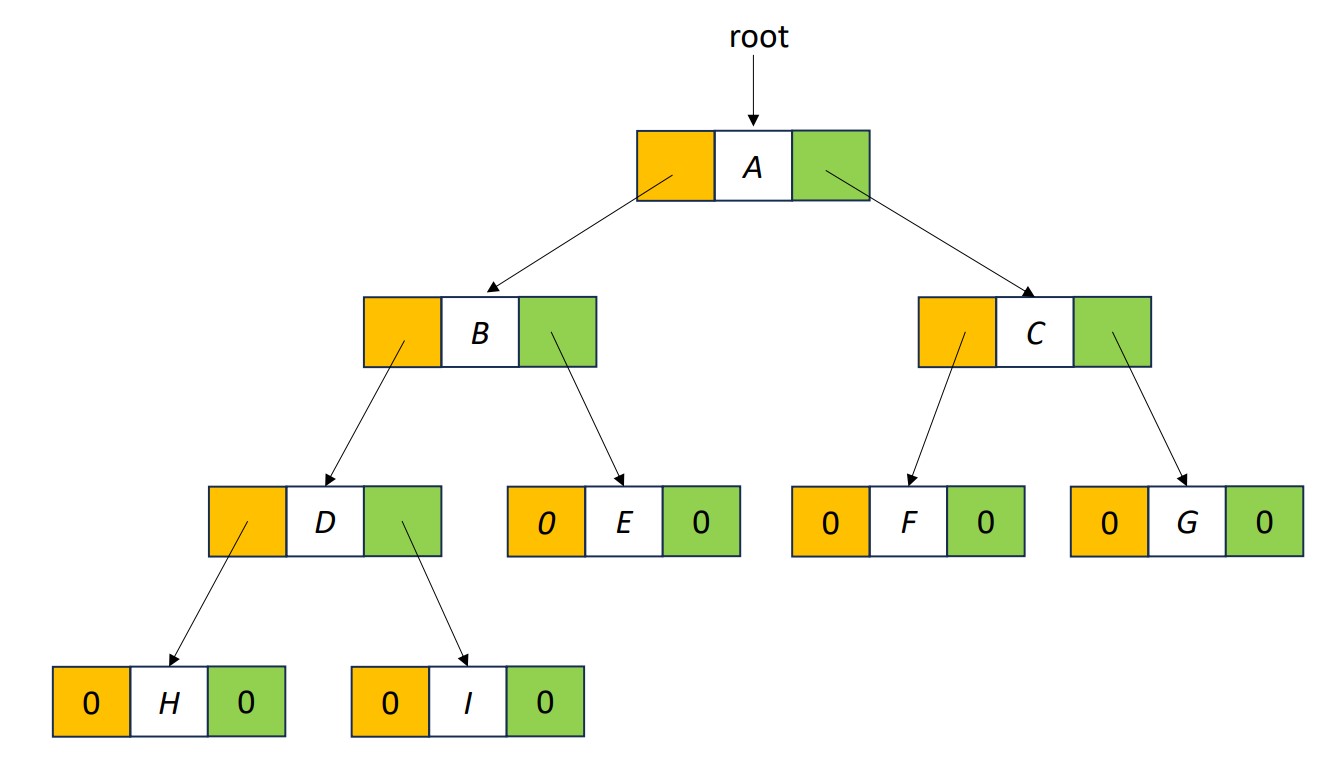

Binary Trees

Binary trees are trees that have the following characteristics.

- Degree-2 tree

- Recursive definition

- Each node has left subtree and/or right subtree.

- Left subtree (or right subtree) is a binary tree.

This is an picture of a binary tree.

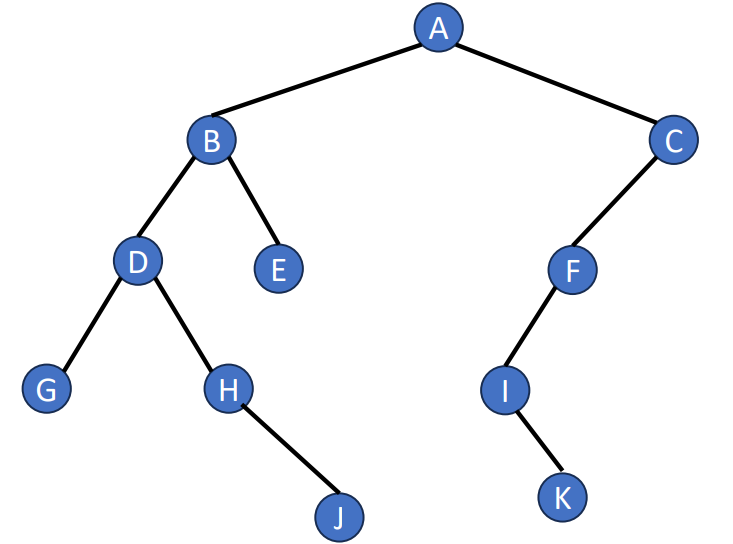

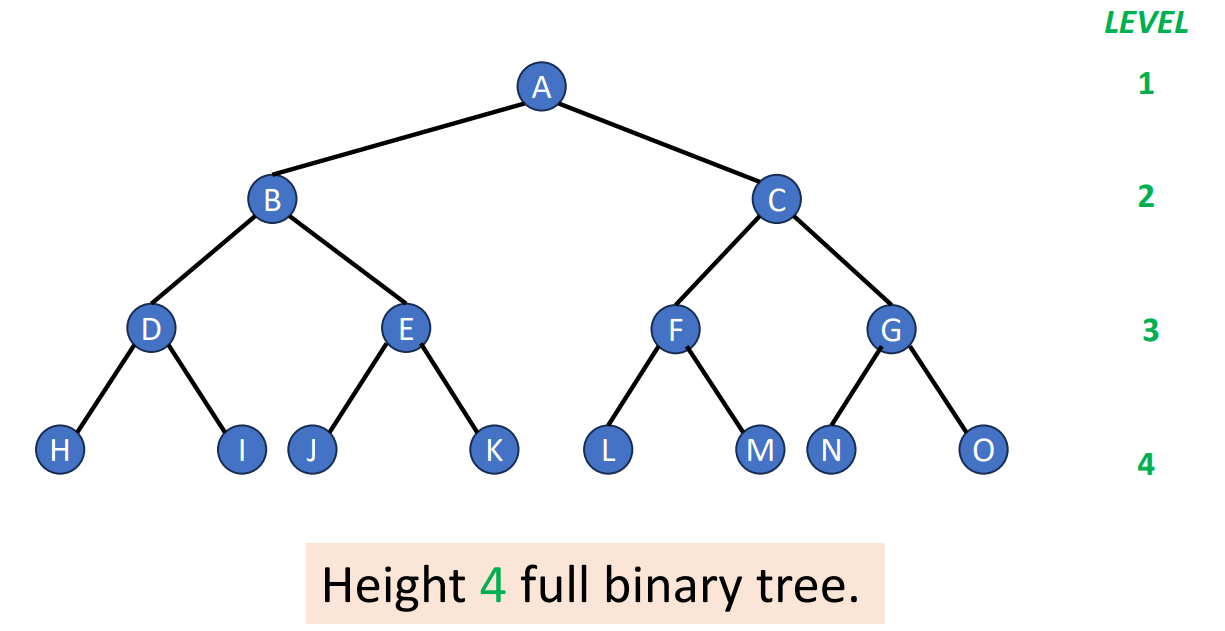

Full Binary Trees & Perfect Binary Trees

- Full Binary Tree

- A full binary tree is a binary tree with either or child nodes for each node

- Perfect Binary Tree

- A full binary tree

- A tree that has height and nodes

Please note that each perfect binary tree of the same height will only have one type. If we numbering the nodes in a perfect binary tree from top to bottom, left to right, then we will have the following properties.

- Let be the number of nodes in a perfect binary tree

- Parent node of is node

- Left child of node is node

- Right child of node is node

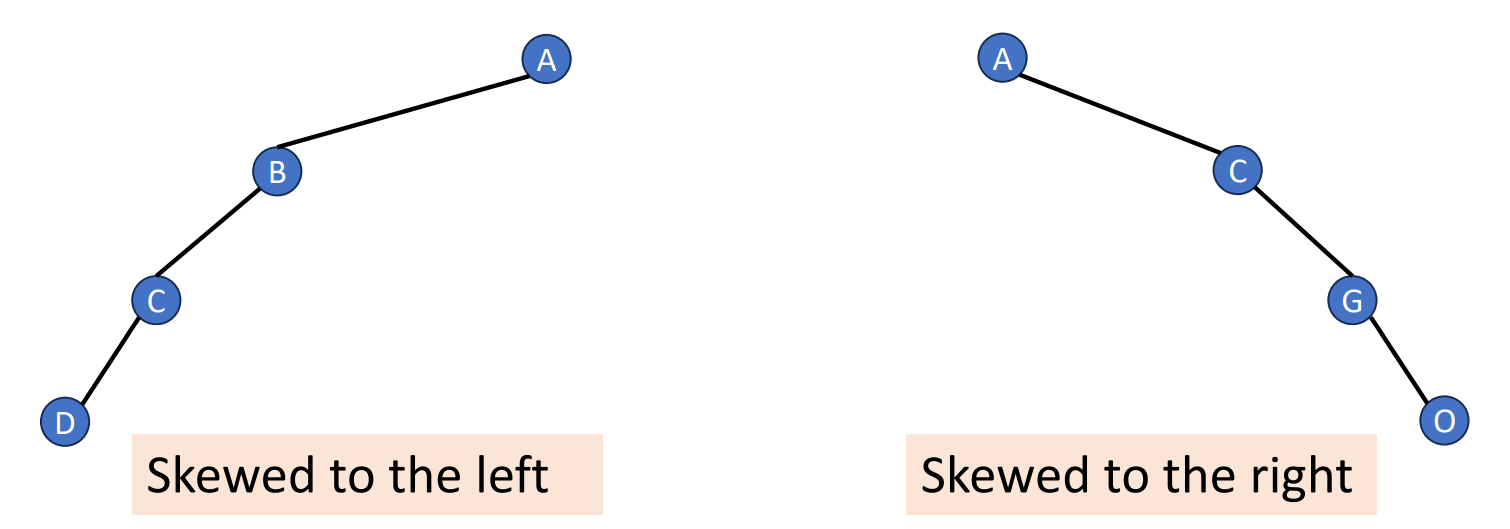

Skewed Binary Trees

- At least one node at each of first levels

- Minimum number of nodes for a height

Number of Nodes and Height

- Let be the number of nodes in a binary tree whose height is .

- The height of a binary tree is at least

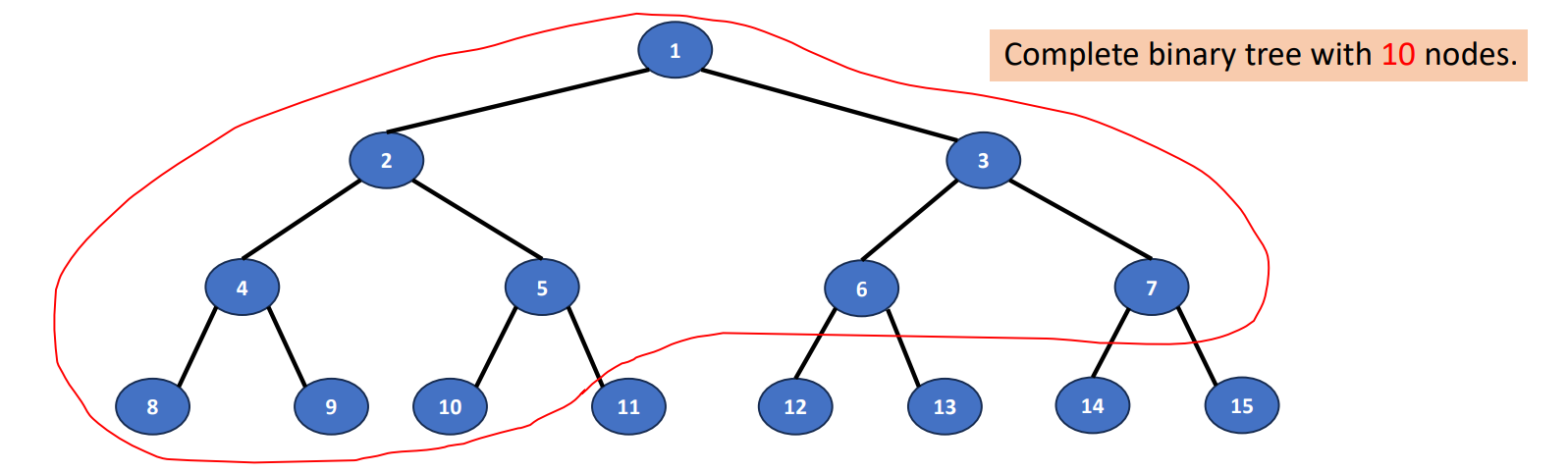

Complete Binary Trees

How to create a complete binary tree? Just follow the steps and check out the graph below.

- Create a full binary tree which has at least nodes

- Number the nodes sequentially

- The binary tree defined by the node numbered through is the n-node complete binary tree

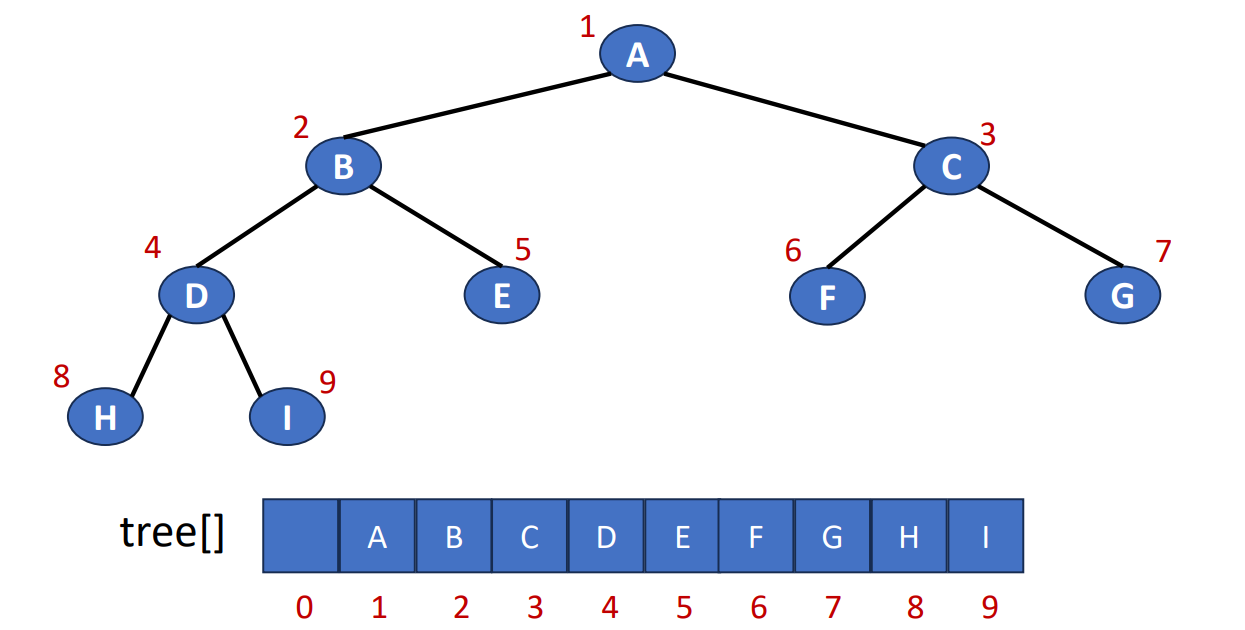

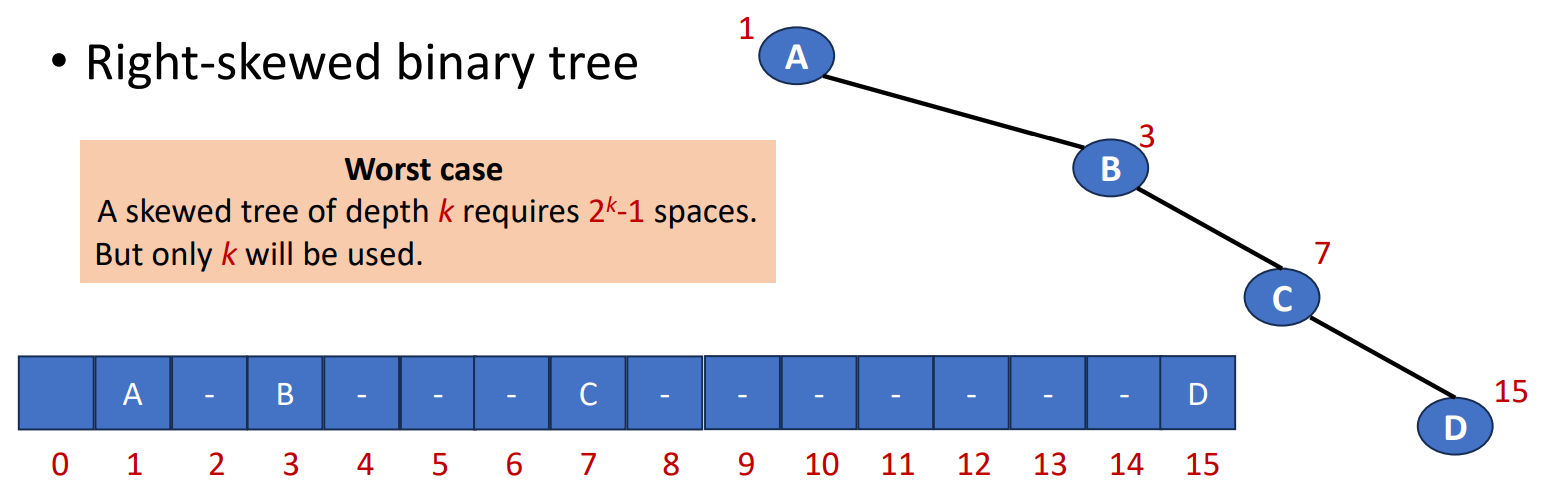

Binary Tree Representations

Array Representation

- Number the nodes using the numbering scheme for a full binary tree. The node that is numbered

iis stored intree[i].

Since the number should be placed in the FULL binary tree, sometimes there will be some memory waste. For example, if we create a 4-level right-skewed binary tree, it will has the length 15, but only 4 being used.

In array representation, the tree will has the following properties.

- Let be the number of nodes in a tree

- Parent node of is node

- Left child of node is node

- Right child of node is node

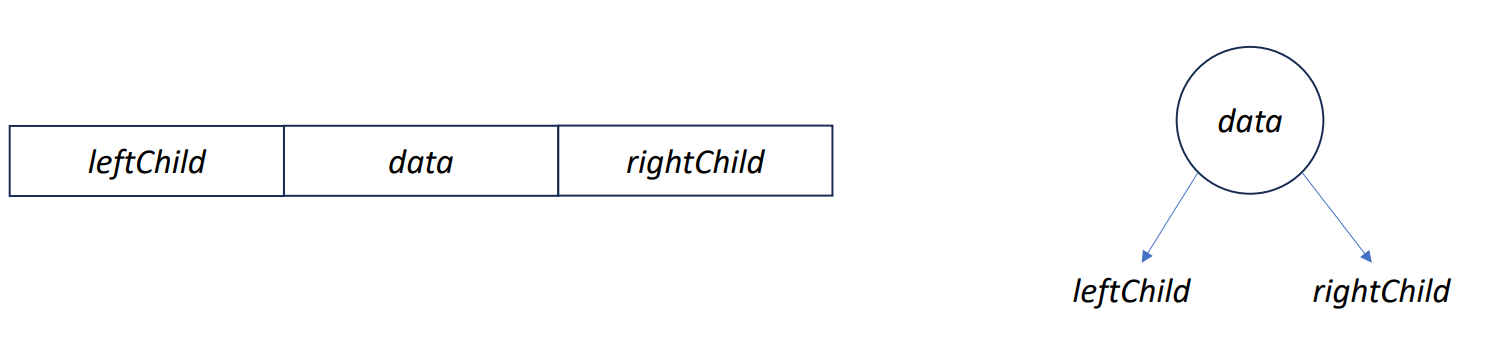

Linked Representation

- Each binary tree node is represented as an object whose data type is

TreeNode. - The space required by an

nnode binary tree isn * (space required by one node).

typedef struct node *treePointer;

typedef struct node{

char data;

treePointer leftChild, rightChild;

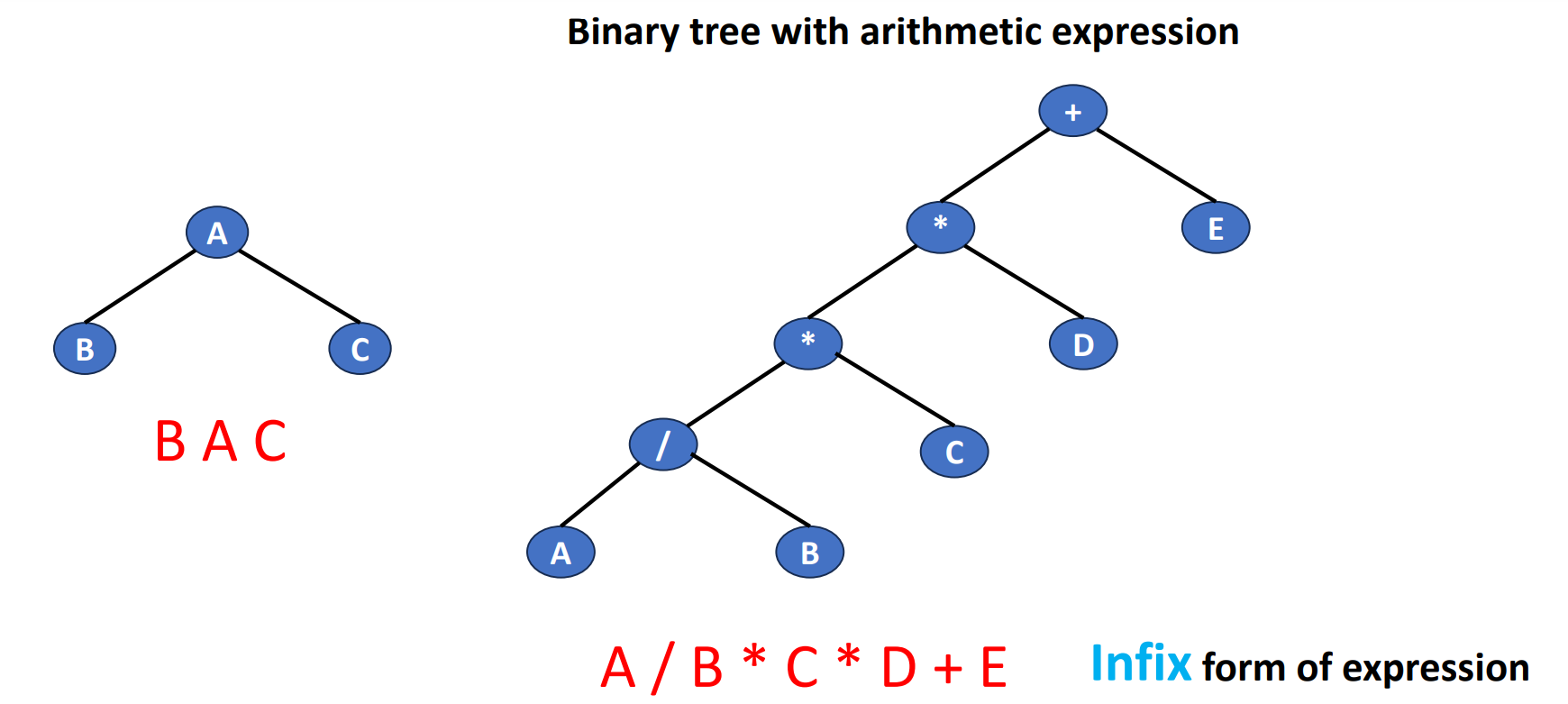

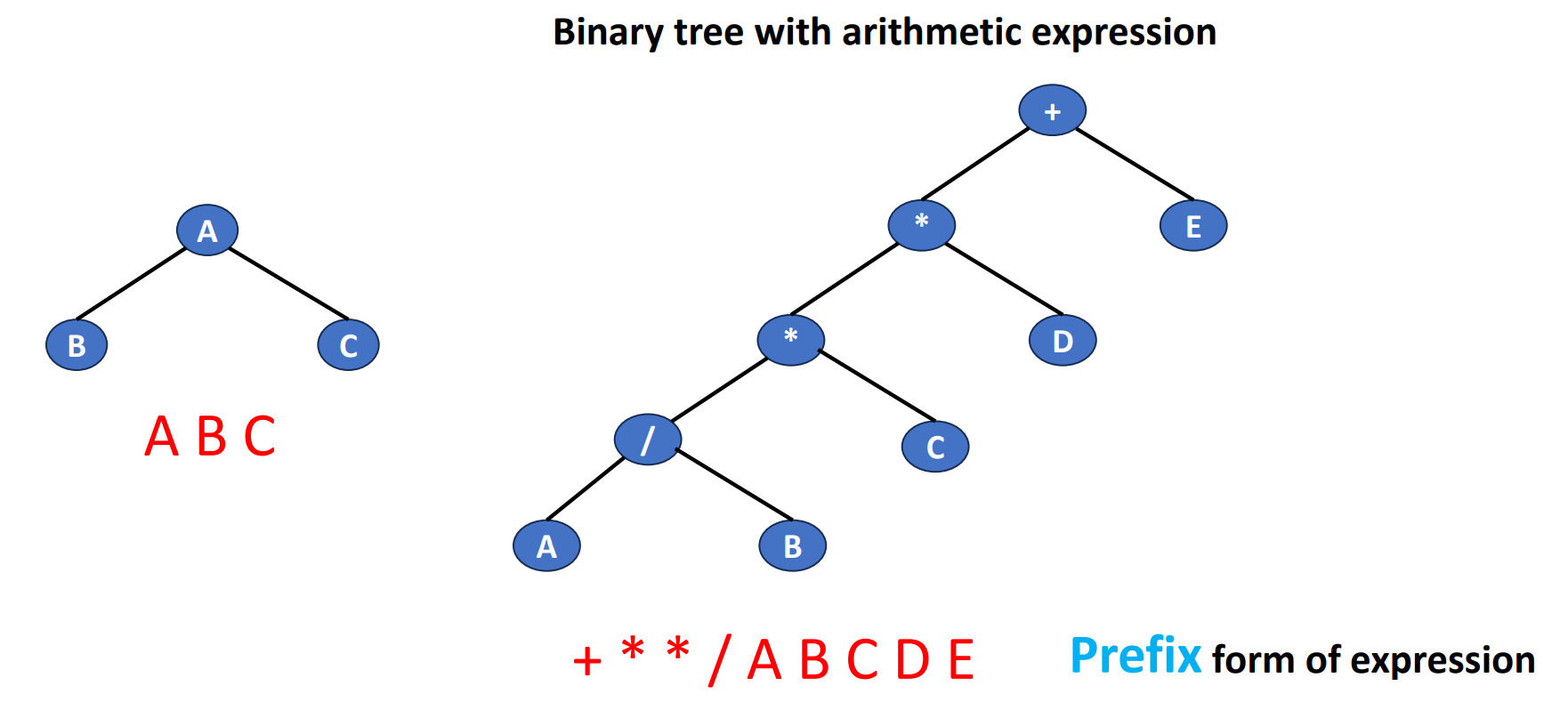

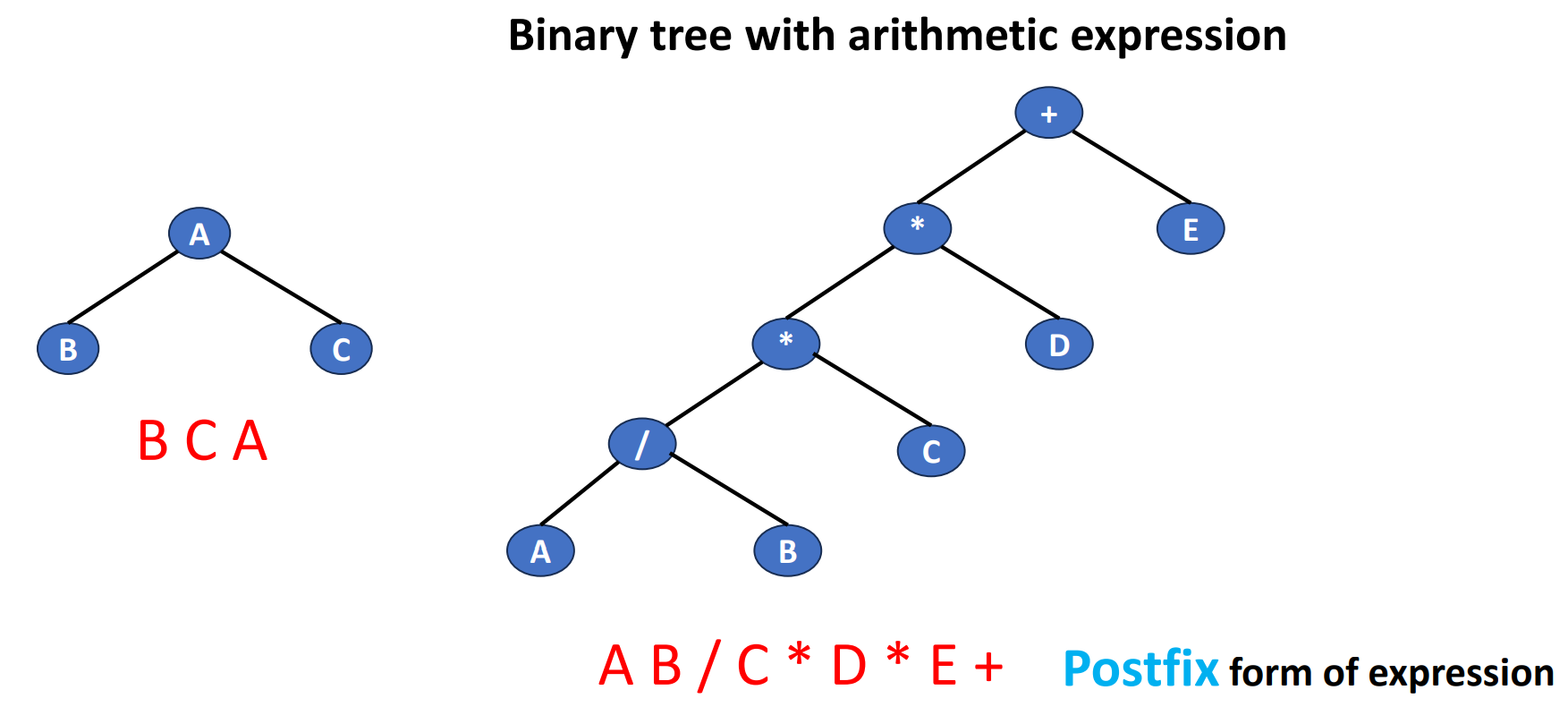

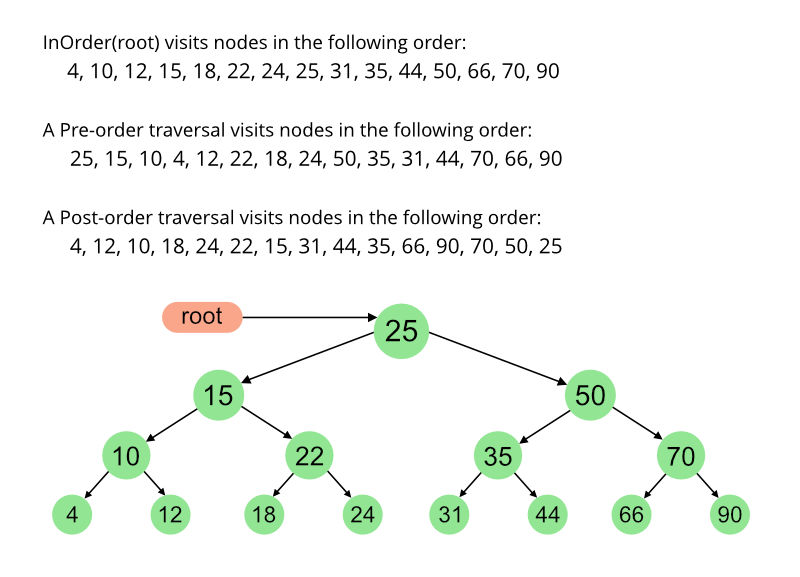

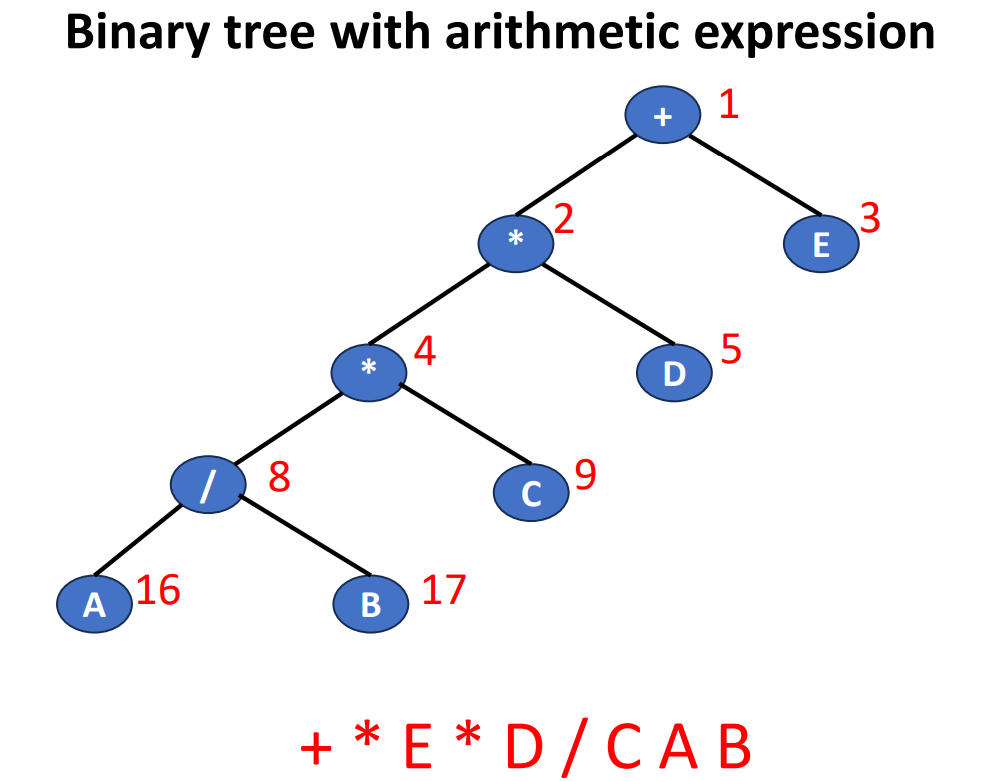

};Binary Tree Traversal

- Visiting each node in the tree exactly once

- A traversal produces a linear order for the nodes in a tree

- LVR, LRV, VLR, VRL, RVL, and RLV

- L

- moving left

- V

- visiting the node

- R

- moving right

- L

- LVR

- Inorder

- VLR

- Preorder

- LRV

- Postorder

- LVR, LRV, VLR, VRL, RVL, and RLV

Inorder

void inOrder(treePointer ptr){

if (ptr != NULL){

inOrder(ptr->leftChild);

visit(ptr);

inOrder(ptr->rightChild);

}

}Preorder

void preOrder(treePointer ptr){

if (ptr != NULL){

visit(t);

preOrder(ptr->leftChild);

preOrder(ptr->rightChild);

}

}Postorder

void postOrder(treePointer ptr){

if (ptr != NULL){

postOrder(ptr->leftChild);

postOrder(ptr->rightChild);

visit(t);

}

}Difference Between Inorder, Preorder & Postorder

This is an illustration from GeeksforGeeks.

Level-Order

- Visiting the nodes following the order of node numbering scheme (sequential numbering)

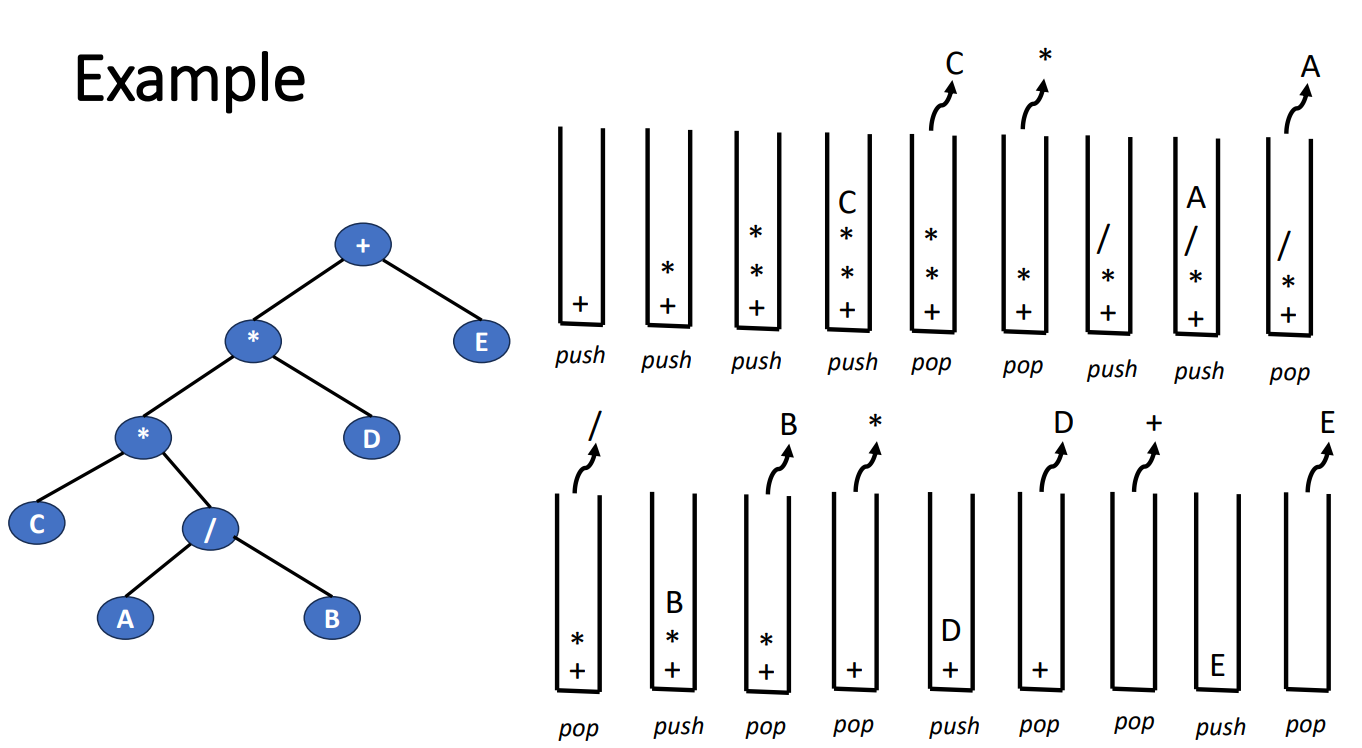

Iterative Traversal Using Stack

The following is an inorder example.

- Push root into stack

- Push the left into stack until reaching a null node

- Pop the top node from the stack

- If there’s no node in stack, break

- Push the right child of the pop-out node into stack

- Go back to step 2 from the right child

Here’s the graph to let you more understand the workflow.

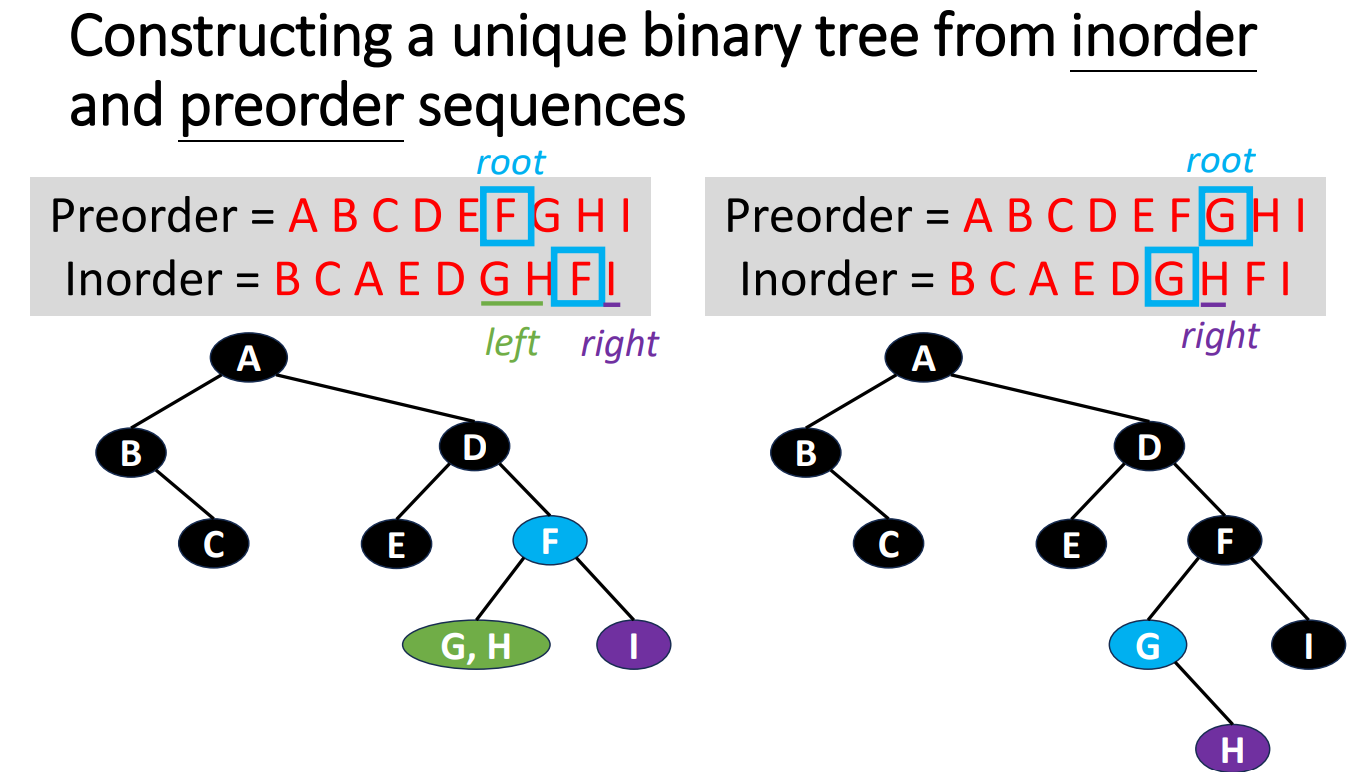

Convert Sequences Back to Trees

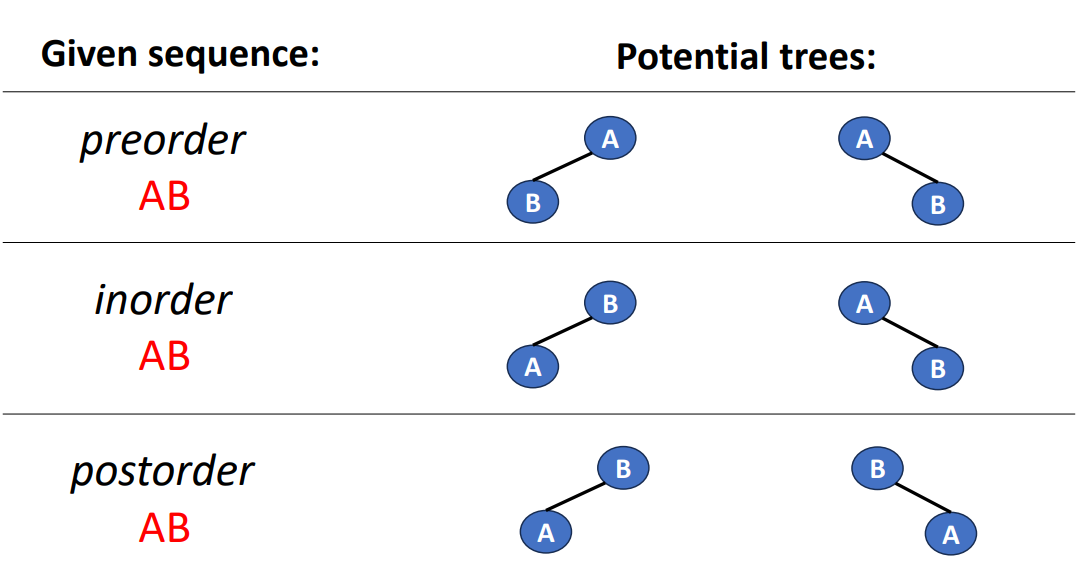

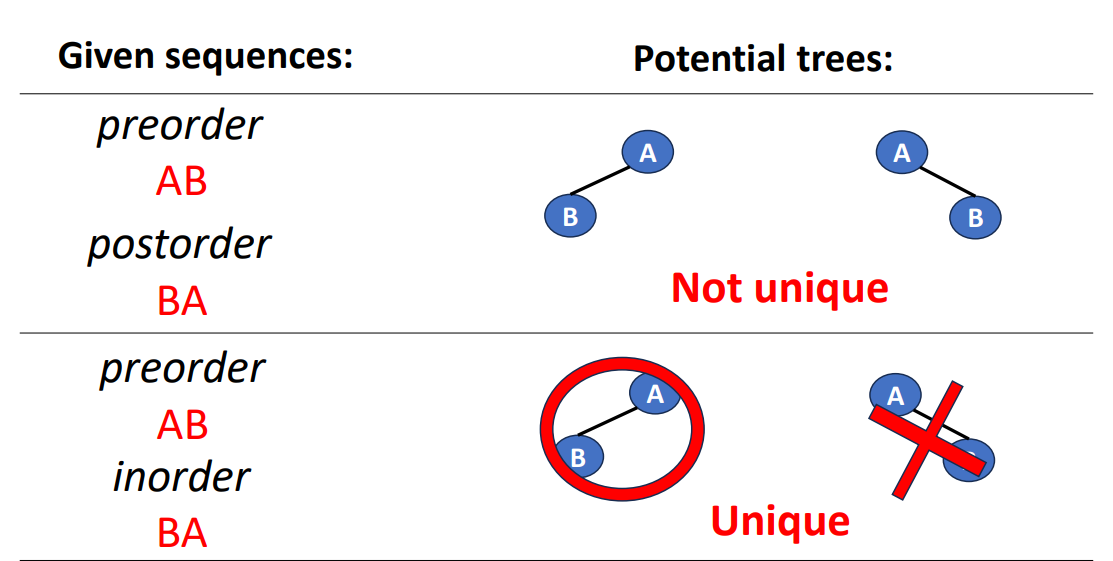

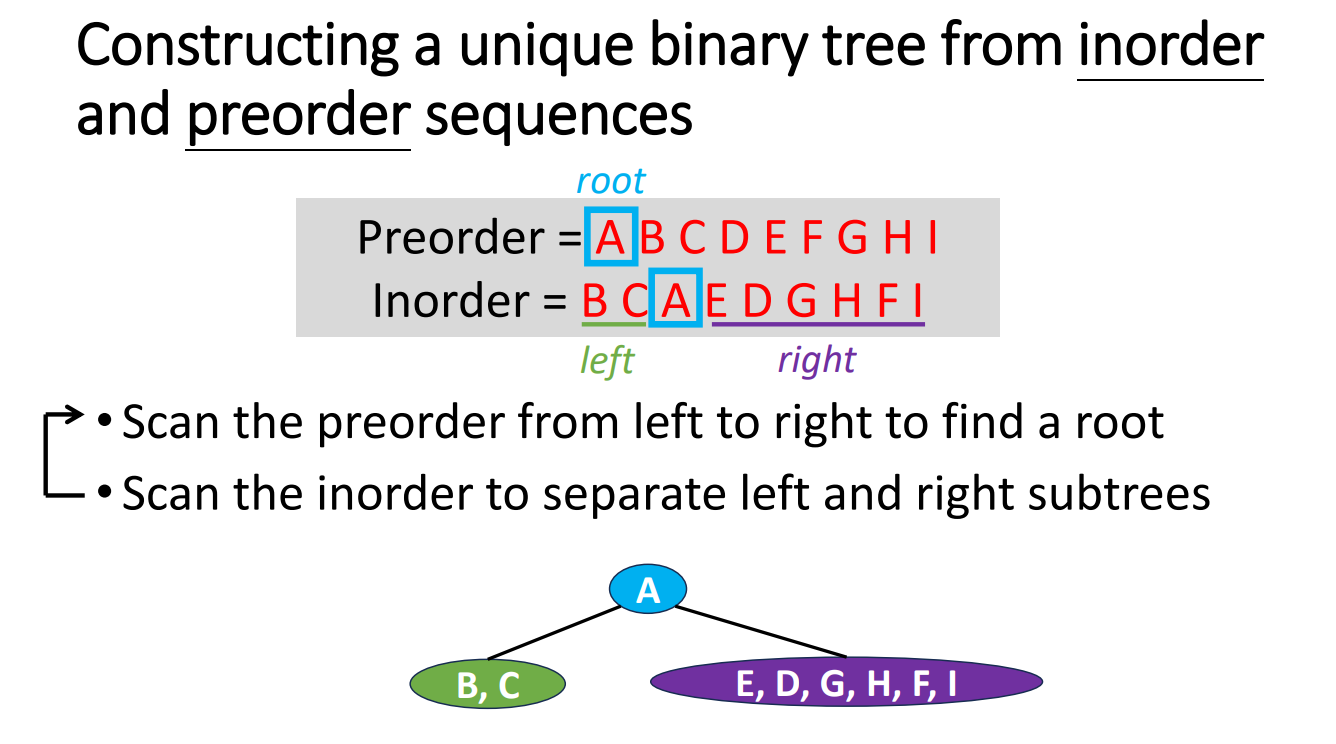

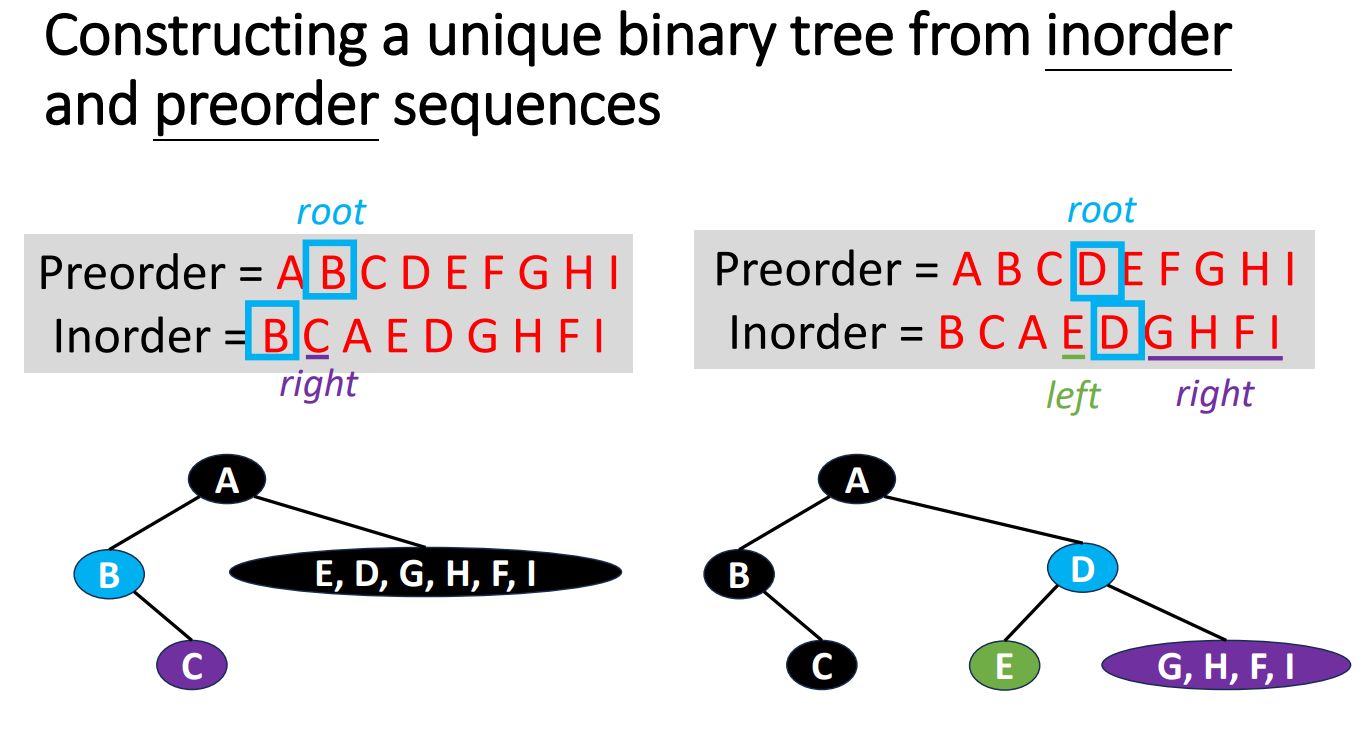

If we are given a sequence of data and we try to turn it back to a tree, how should we do? The following is an example of turnning a very short sequence data back into trees.

We can clearly see that if we are only given a sequence in 1 specific order, then we cannot know which side (left or right) is the original tree grows. But if we are given 2 different orders with 1 of them is inorder, then we can do it by the inference.

Binary Search Trees

Intro

The binary search tree, of course, it’s an binary tree. But beside this, it has some unique properties so that we can call it a binary search tree.

- Each node has a (key, value) pair

- Keys in the tree are distinct

- For every node

x- all keys in the left subtree are smaller than that in

x - all keys in the right subtree are larger than that in

x

- all keys in the left subtree are smaller than that in

- The subtrees are also binary search tree.

Operations

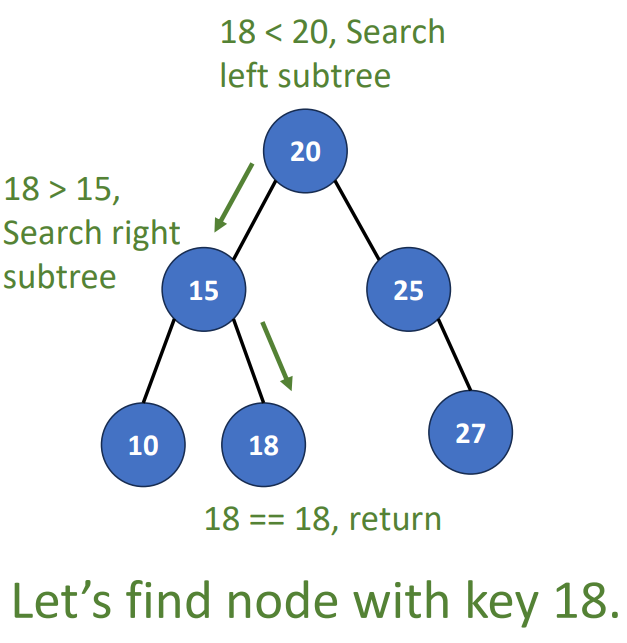

Search (root, key)

- k == root’s key, terminate

- k < root’s key, check left subtree

- k > root’s key, check right subtree

- Time complexity is , is number of nodes

Recursive

if (!root) return NULL;

if (k == root->data.key)

return root->data;

if (k < root->data.key)

return search(root->leftChild, k);

return search(root->rightChild,k)Variable space requirement: , is the number of nodes.

Iterative

while(tree);

if (k == tree->data.key)

return tree->data;

if (k < tree->data.key)

tree = tree->leftChild;

else

tree = tree->rightChild;

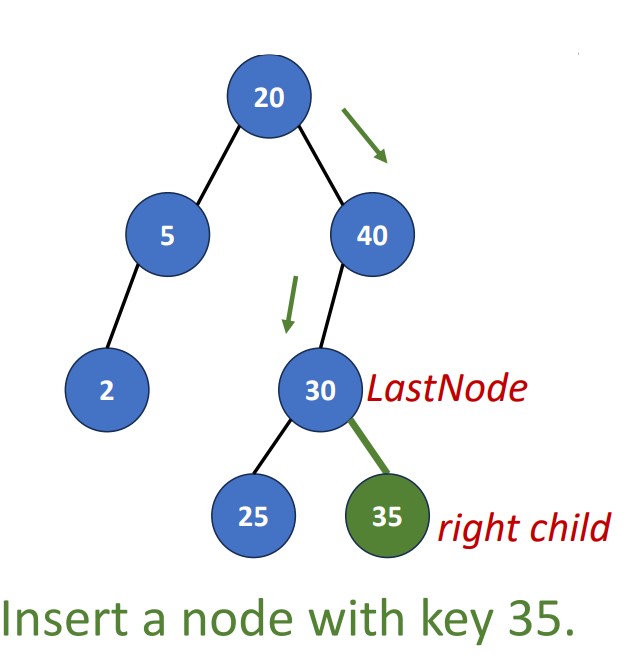

return NULLInsert(root, key, value)

- Search the tree

- Matched: Do nothing

- No match: Obtain

LastNode, which is the last node during the search.

- Add new node

- Create a new node with (key, value)

- If key > the key of

LastNode, add the new node as right child - If key < the key of

LastNode, add the new node as left child

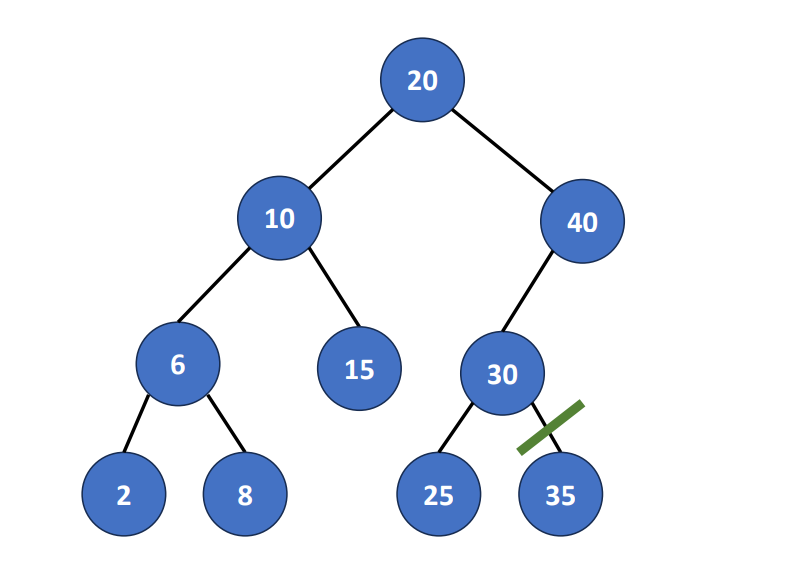

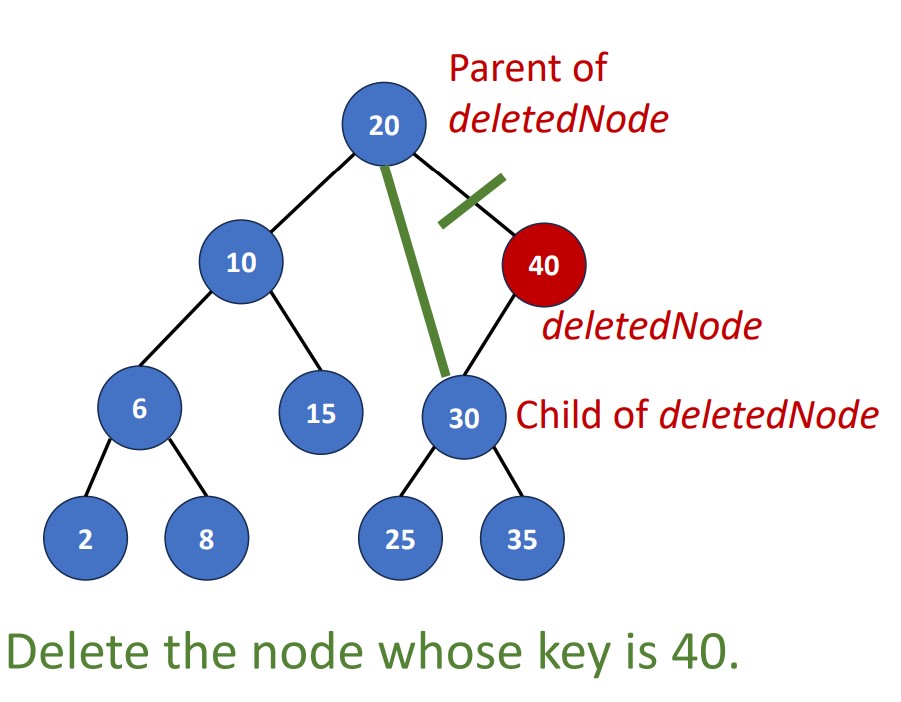

Delete (key)

There’re 4 cases in the deletion.

- No element with delete key

- Element is a leaf

- Element is a node

- Element is a node

Since the 1st case means we don’t do anything, we will start from the 2nd case.

Delete a leaf

Delete a Degree-1 Node

Link the single child of the DeletedNode to the parent of DeletedNode.

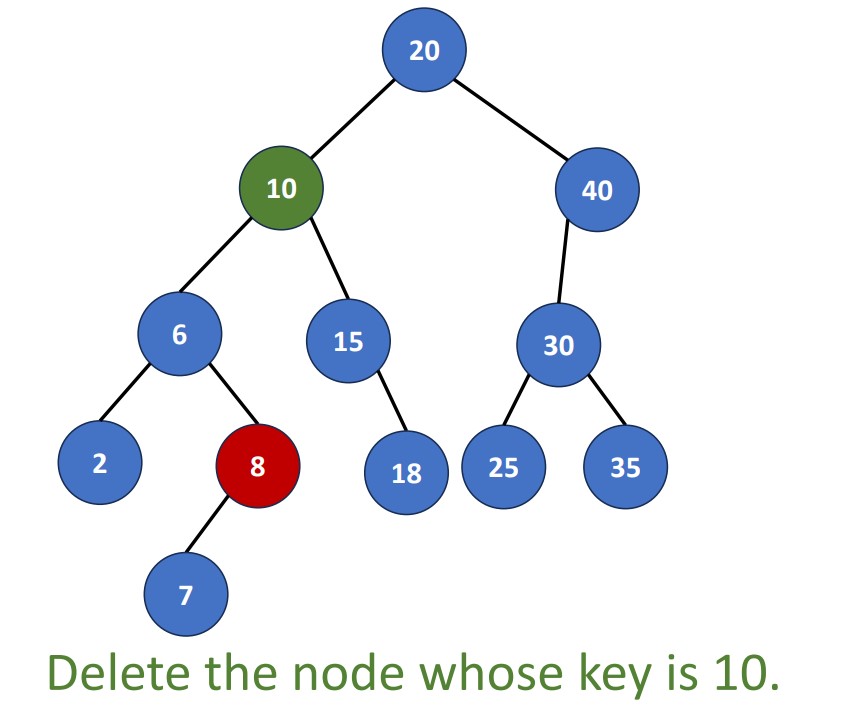

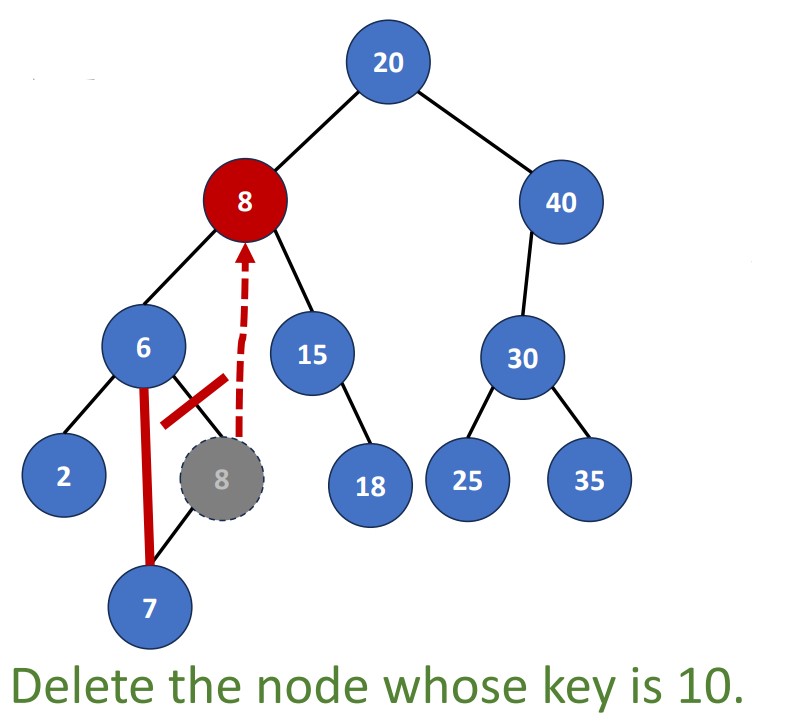

Delete a Degree-2 Node

There two ways to do it. We can replace the deleted node with

- Largest pair in its left subtree

- Smallest pair in its right subtree

These two pairs must be leaf nodes or degree-one nodes. Why?

Rank of a Node in a BST

Rank of node

- The number of the nodes whose key values are smaller than

- Position of inorder

- Like the index of an array

Heaps

Intro

Imagine that we have a program that records patients waiting in the hospital, if the data structure we use is a queue (FIFO), and a dying person comes, we cannot let him queue up to see the doctor first.

So that’s why we need a priority queue! But how to implement one? Let’s dive into the heaps right away!

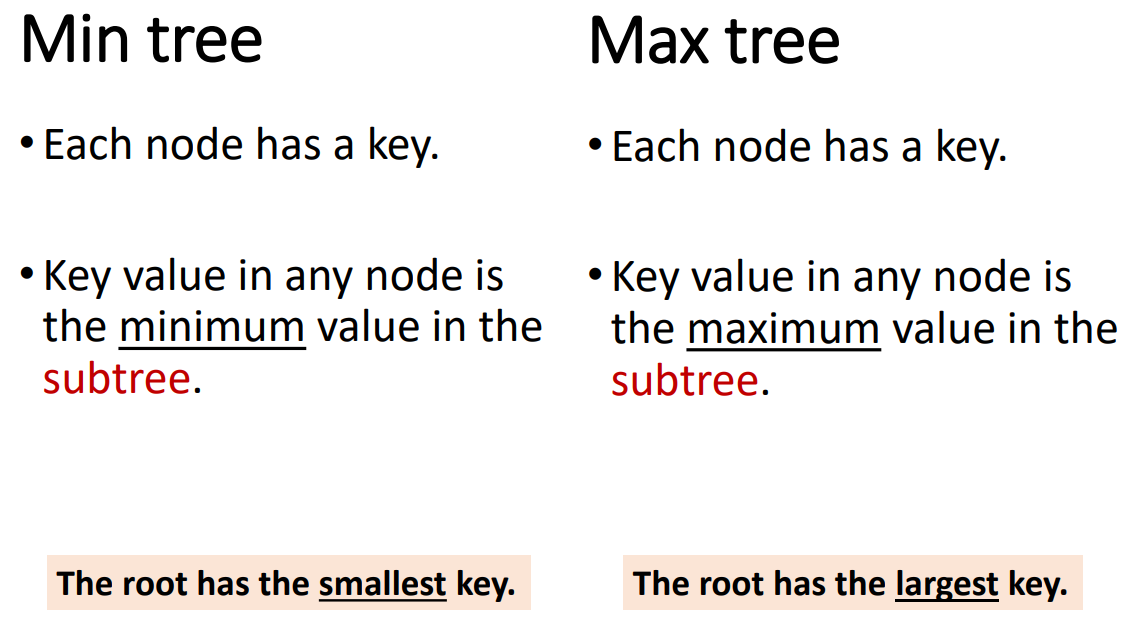

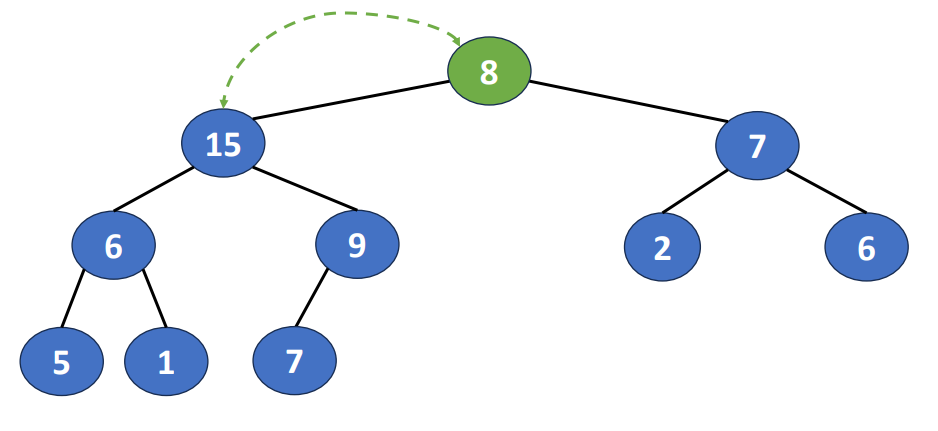

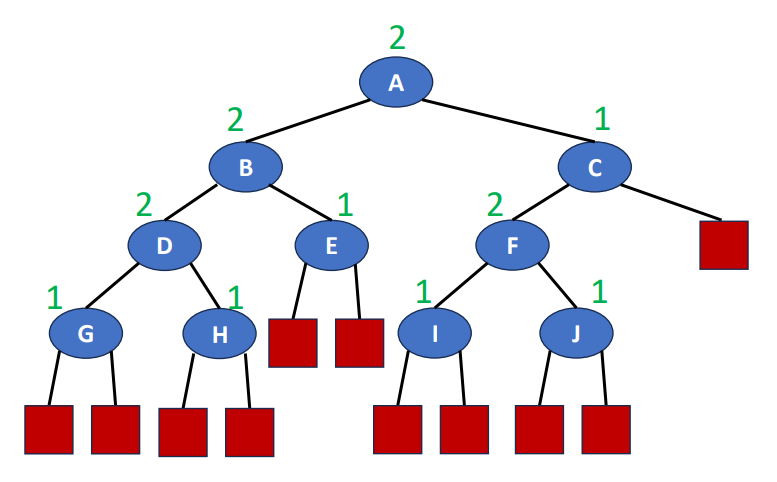

Min Tree & Max Tree

First, we should know what is min tree and max tree to establish the concept we will use later.

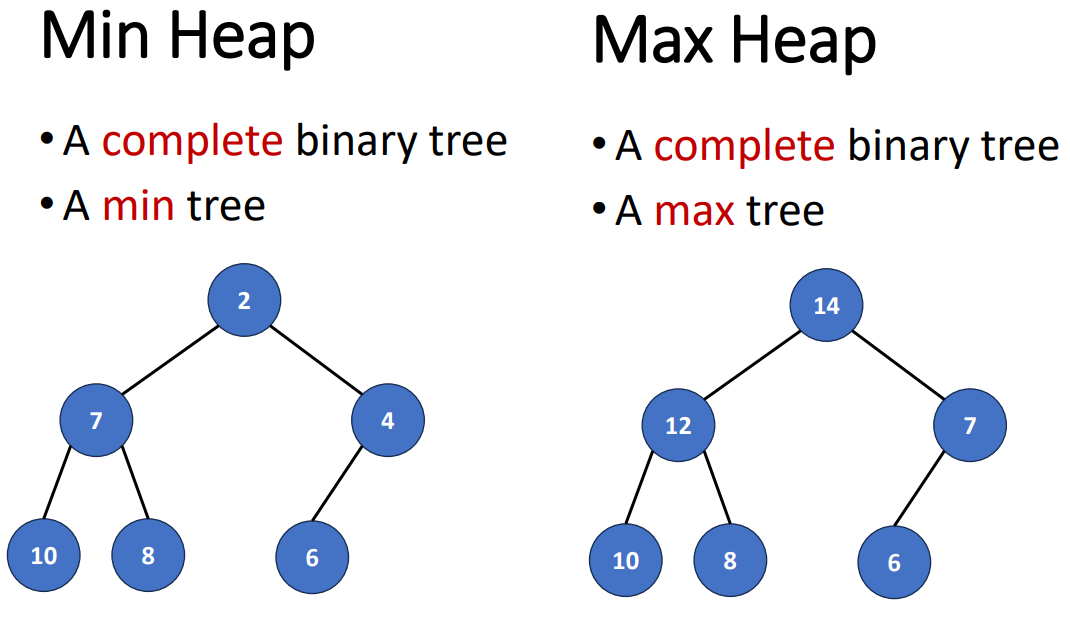

Min Heap & Max Heap

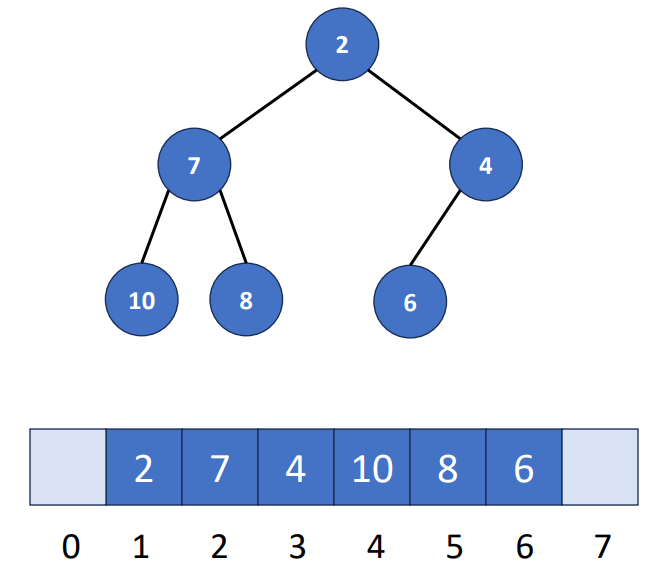

Array Representation

Operations

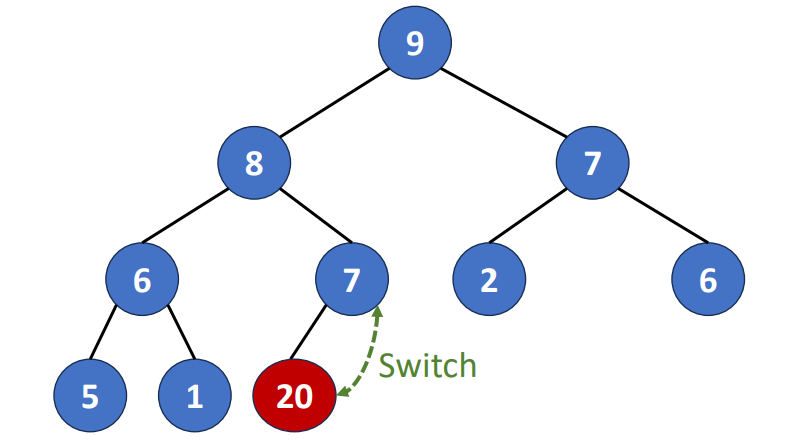

Here I use max heap for example, but it’s very similar with the min heap so you can try it by yourself! We’re going to use 2 operations called heapify up and heapify down.

- Heapify up

- While the new element has a larger value than its parent, swap the new element and its parent

- Heapify down

- While the “out of place element” has a smaller priority than any child, swap the out of place element with the smallest child

Insert(key)

- Create a new node while keep the heap being a complete binary tree

- Compare the key with the parent

- If the key < parent, pass

- If the key > parent, switch place with parent and recursively compare the parent with parent’s parent until it match the definition (key value in any node is the maximum value in the subtree)

This process is also called bubbling up process since it looks like a bubble goes up the water surface.

After we know how to insert things to a heap, we also care about it’s speed. The time complexity of the inseriton is , which is the heap size (height/level).

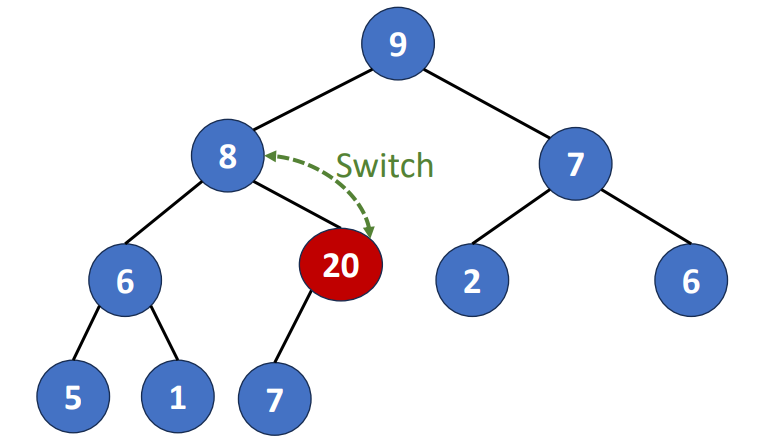

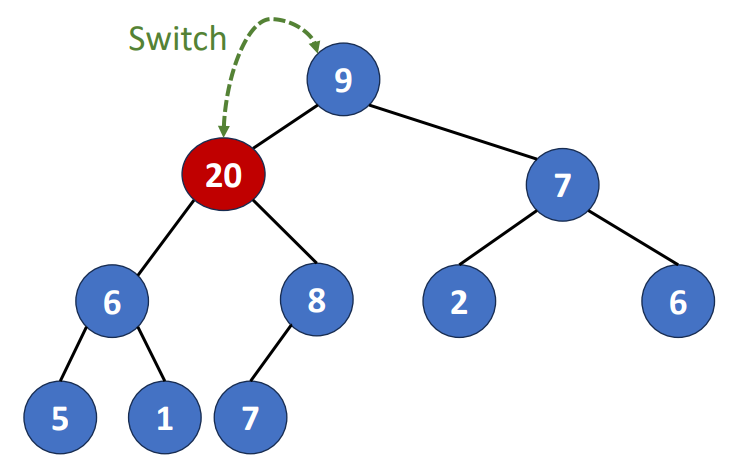

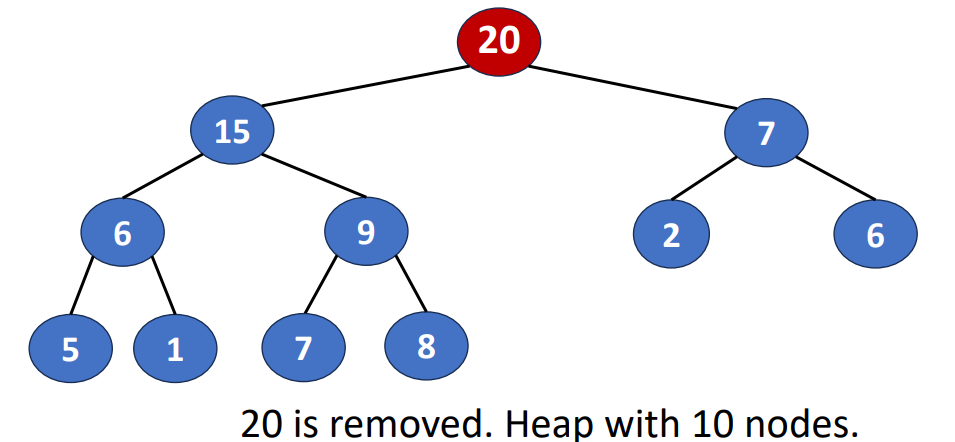

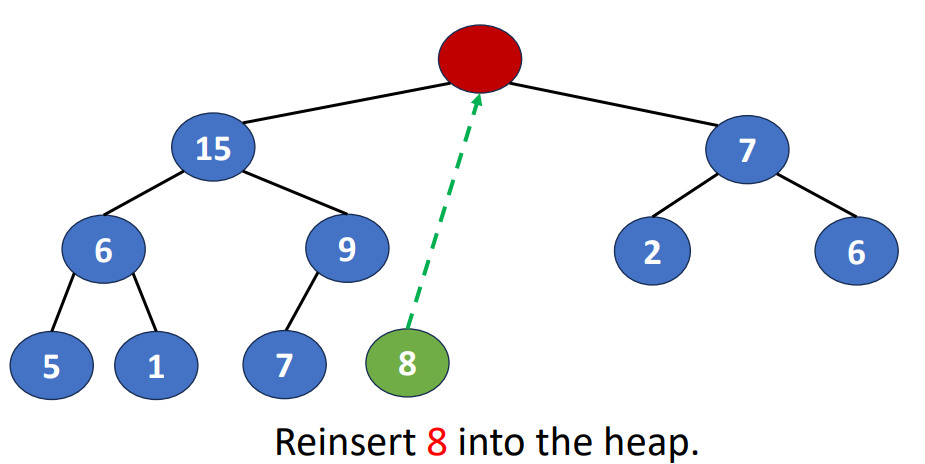

Delete() or Pop()

- Removing the root of the heap

- Root is the min element in a min heap

- Root is the max element in a max heap

Following is the steps to do this operation

- Removing the last node and inserting it into the root

- Moving the node to a proper position

- Find the child containing max key value and exchange the position

And the time complextity of deletion is , where is also the heap size.

Leftist Heaps (Leftist Trees)

Intro

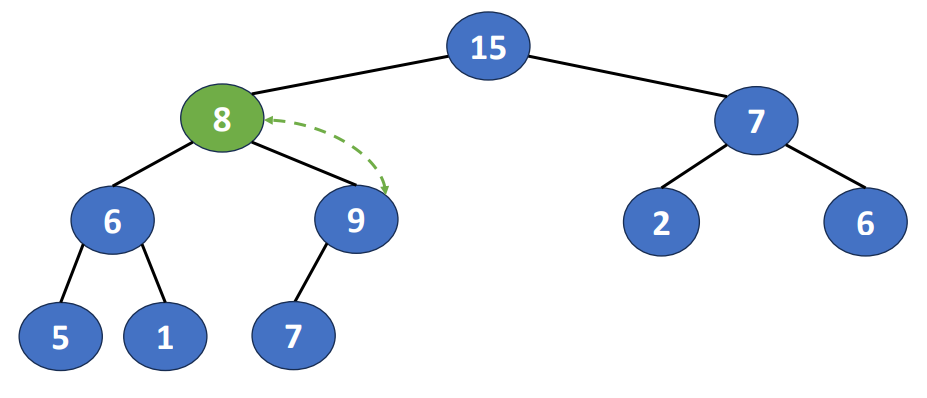

In previous part, we use the situation in hospital as an example to explain why we need priority queue, and now the situation becomes more complicated. In that hospital, 2 doctors are on call, just like the following graph.

But doctor1 becomes off duty, the 2 priority queues should be melded together for doctor2. To do this, we need the Leftist heaps!

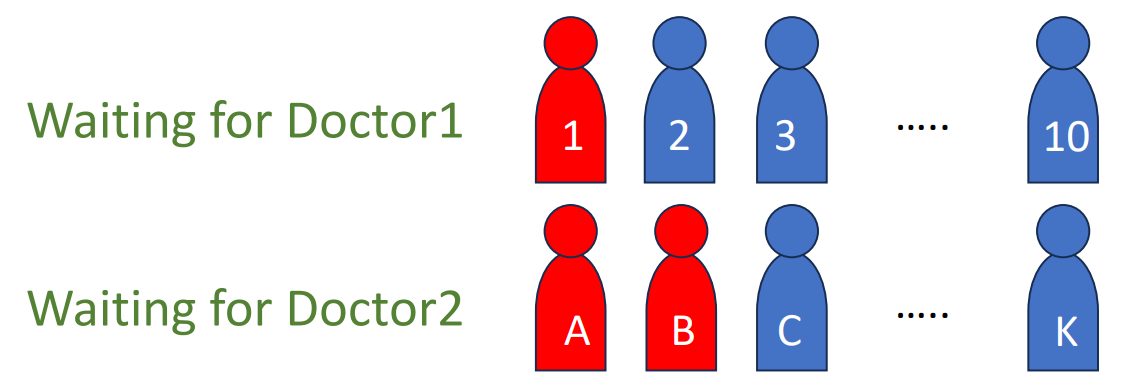

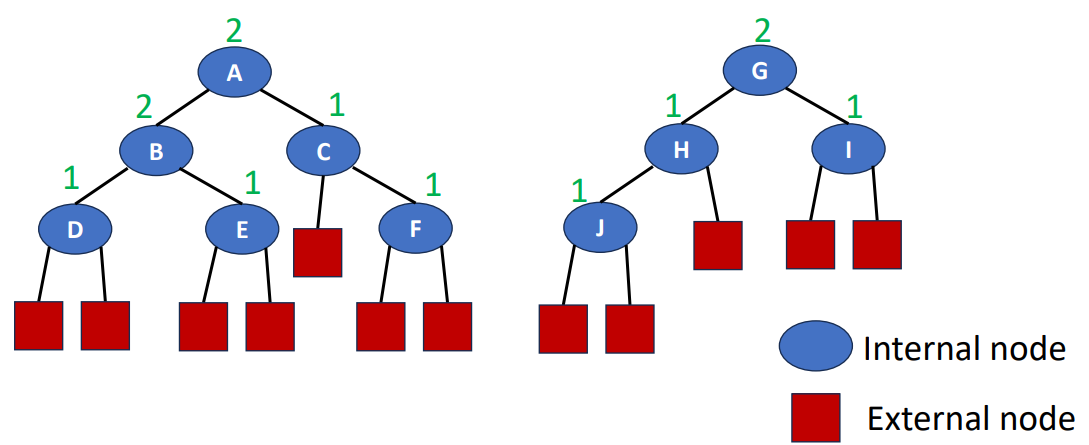

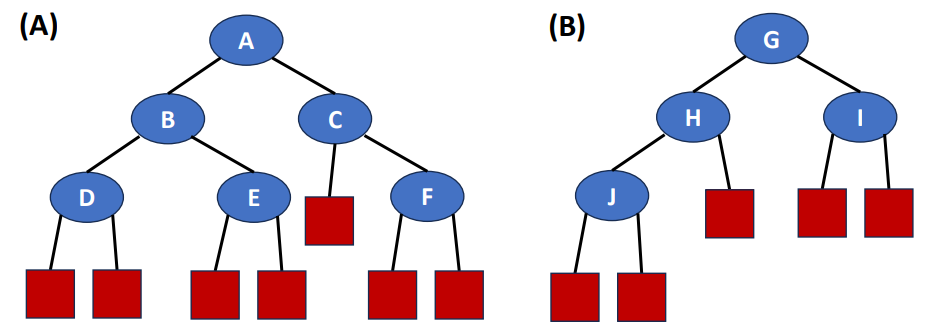

Extended Binary Trees

Before we step into the leftist tree, I need to introduce extended binary trees, a transformation of binary trees. To turn a binary tree into an extended binary tree, we just simply add an external node to every leaf in the original binary tree, then we obtained an extended binary tree! This is an example.

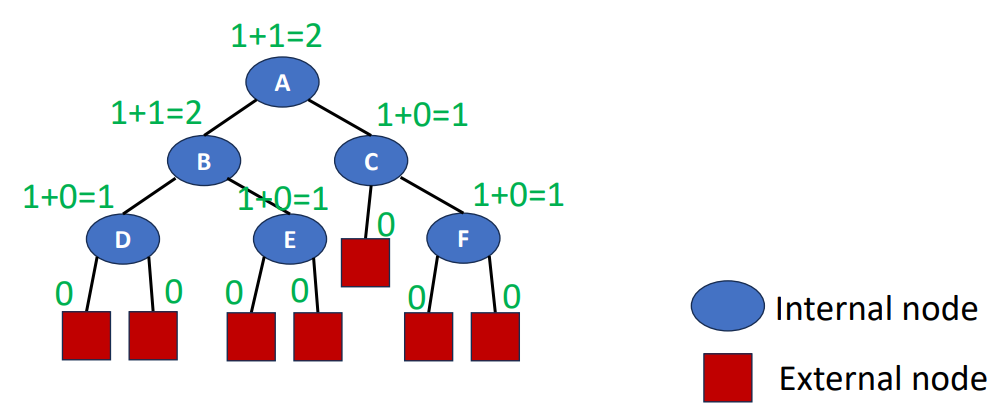

Function: Shortest(x)

Let’s say x is a node in an extended binary tree, then function shortest(x) is the length of a shortest path from x to an external node.

And here’s the formula for calculate the shortest(x) for all x belongs to internal nodes (since shortest(x) for all x in external nodes are 0).

Function: Count(x)

This one will be a little easier to understand. Count means the nubmer of internal nodes. So for all x, which x is an internal node, we can calculate count(x) by the following formula (since count(x) of all x in external nodes are 0).

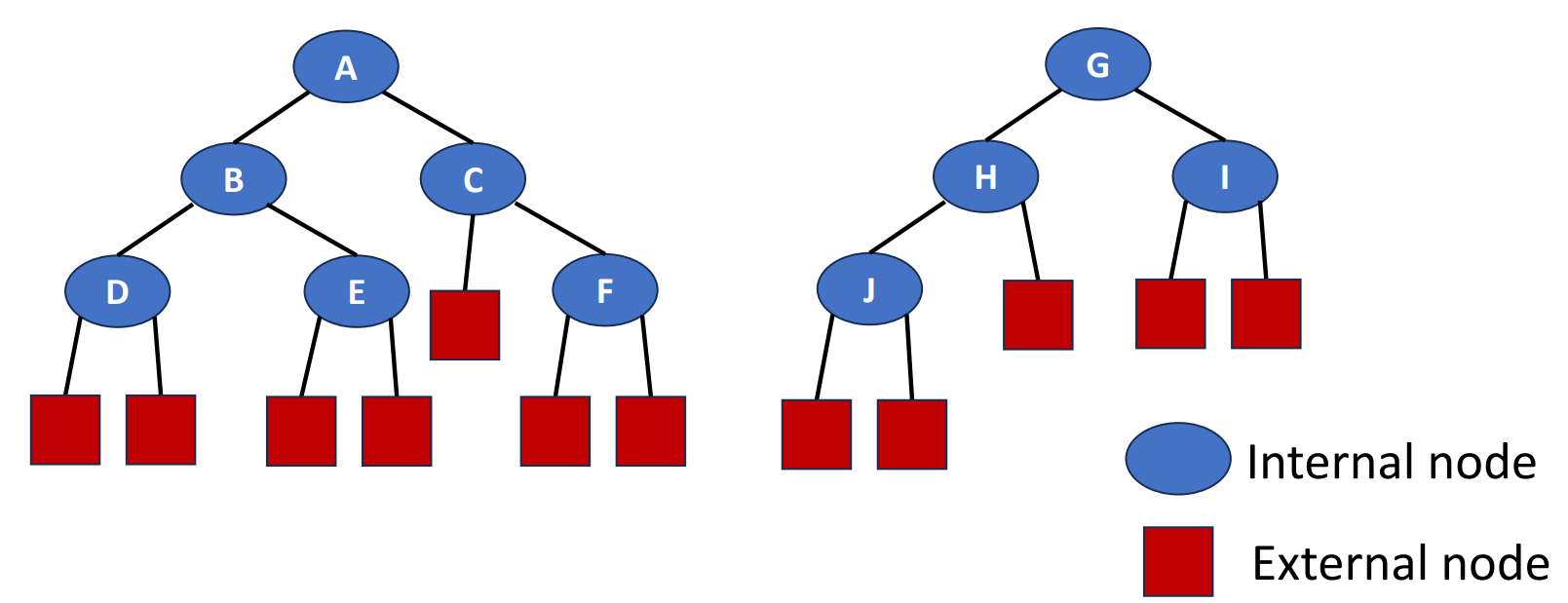

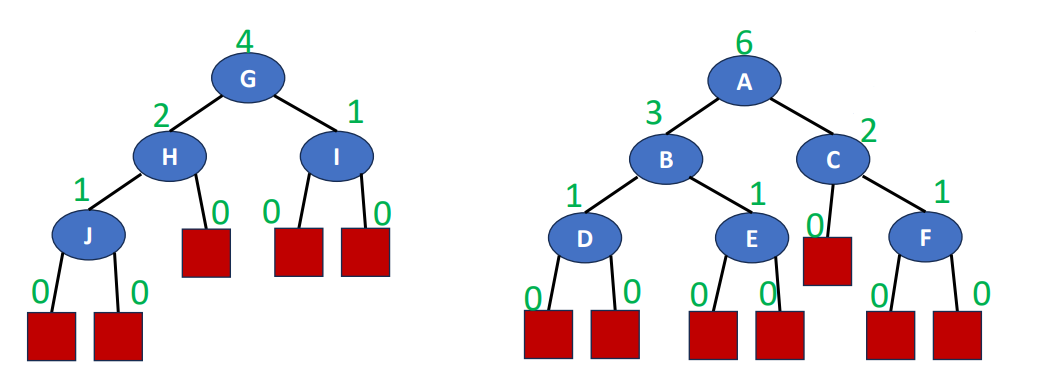

Height-Biased Leftist Trees (HBLT)

Following is the definitioan of a HBLT:

- An extended binary tree

- For every internal node ,

In the following graph, B is a HBLT while A isn’t.

Weight-Biased Leftist Trees (WBLT)

Following is the definition of a WBLT:

- An extended binary tree

- For every internal node ,

In the following graph, the left is a WBLT while the right isn’t.

Properties of Leftist Trees

0x00

- The rightmost path is a shortest from root to external node, that is

shortest(root).

0x01

- Number of internal nodes is .

That’s because the first shortest(root) level of nodes constitute a full binary tree, and for a shortest(root) level full binary tree, the total number of nodes is the following.

And that’s why must be larger than . Also, we can infer the following by this inequality.

0x02

- Length of rightmost path is , where is the number of nodes in a leftist tree.

Leftist Trees as Priority Queue

Remember the what I said in the first of this part? We need to make an priority queue that is easy to be merged with other one! Since we have learned min heaps and max heaps before, and leftist tree is actually a type of heap, we have the following types of leftist trees.

- Min Leftist Tree

- Leftist tree that is a min tree. Used as a min priority queue.

- Max Leftist Tree

- Leftist tree that is a max tree. Used as a max priority queue.

Operations

Given that the limited space, I will only use min leftist trees to be the examples, and all the operation here will be considered for min leftist trees. Nevertheless, they can all be applied to max leftist tree just by some modification.

Insert(x, T1)

- Create a min leftist tree

T2containing onlyx - Merge

T1andT2

Following is the animation of inserting a 13 into a min leftist tree.

DeleteMin(T)

- Get subtrees of root,

T_leftandT_right - Delete the original

root - Merge

T_leftandT_right

Following is the animation of deleting a minimum (6 in this case) from a min leftist tree.

Meld(T1, T2) or Merge(T1, T2)

- Phase 1: Top-down process

- Maintaining the property of min tree

- Going down along the rightmost paths in

T1orT2and comparing their roots - For the tree with smaller root, going down to its right child

- If no right child, attaching another tree as right subtree.

- Else, comparing again

- Phase 2: Bottom-up process

- Maintaining the property of leftist tree

- Climbing up through the rightmost path of the new tree

- If not meet the definition of a leftist tree (HBLT or WBLT), interchange the left and right subtrees of the node

- Time Complexity is

- Length of rightmost path is , where is the number of nodes in a leftist tree

- A merge operation moves down and climbs up along the rightmost paths of the two leftist trees.

- is number of total elements in 2 leftist trees

The following is the animation of deleting a minimum from a min leftist tree, but please focus on the merge operation.

Initialize()

- Create single node min leftist trees and place them in a FIFO queue

- Repeatedly remove two min leftist trees from the FIFO queue, merge them, and put the resulting min leftist tree into the FIFO queue

- The process terminates when only 1 min leftist tree remains in the FIFO queue

- Time complexity is

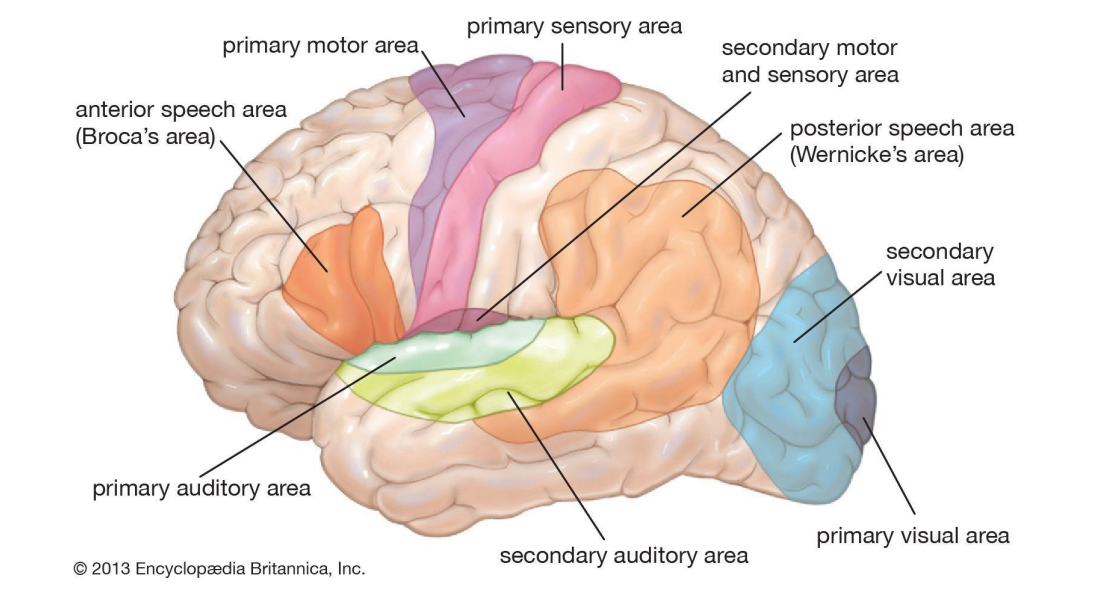

Disjoint Sets

Intro

Let’s forget about the hospital scene, you are now a police officer. You know the relationship of 10 gangster, and No.1 & No.9 are suspects. How do you tell if they’re in a same gang? Let’s why we need disjoint sets! We can use this data structure to store the data like this.

There should Dbe no element appears in 2 different sets in the disjoint sets, which means a unique element should only appear once. Here are 2 examples.

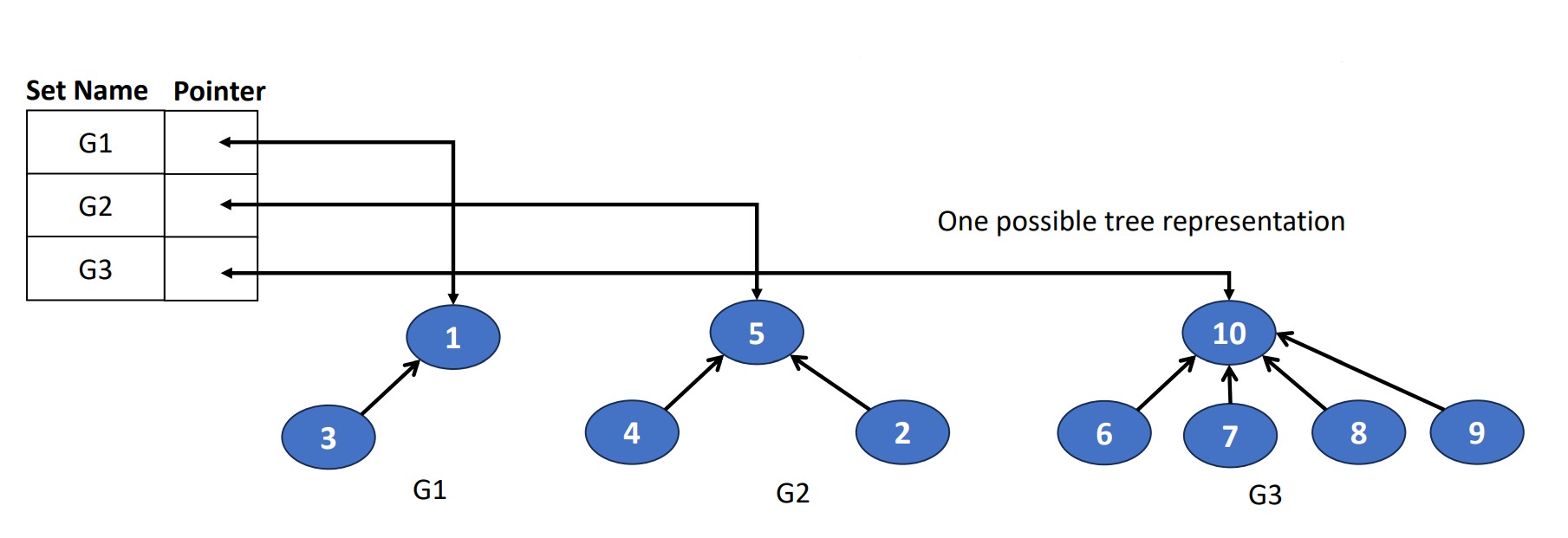

Data Representation of Disjoint Sets

To represent a disjoint set, we link the node from the children to the parent, and each root has a pointer to the set name.

Operations

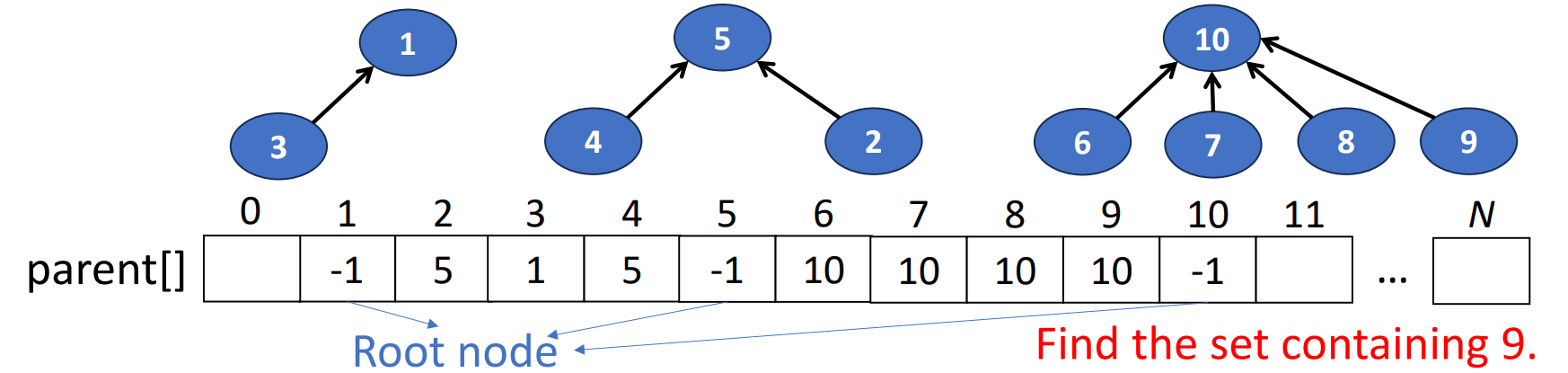

Find(i)

- Find the set containing the targeted element

- Start at the node representing element and climb up the tree until the root is reached. Then return the element in the root

- Using an integer array to store the parent of each element

- Time complexity depends on the level of

while (parent[i] >= 0){

i = parent[i]; // move up the tree

}

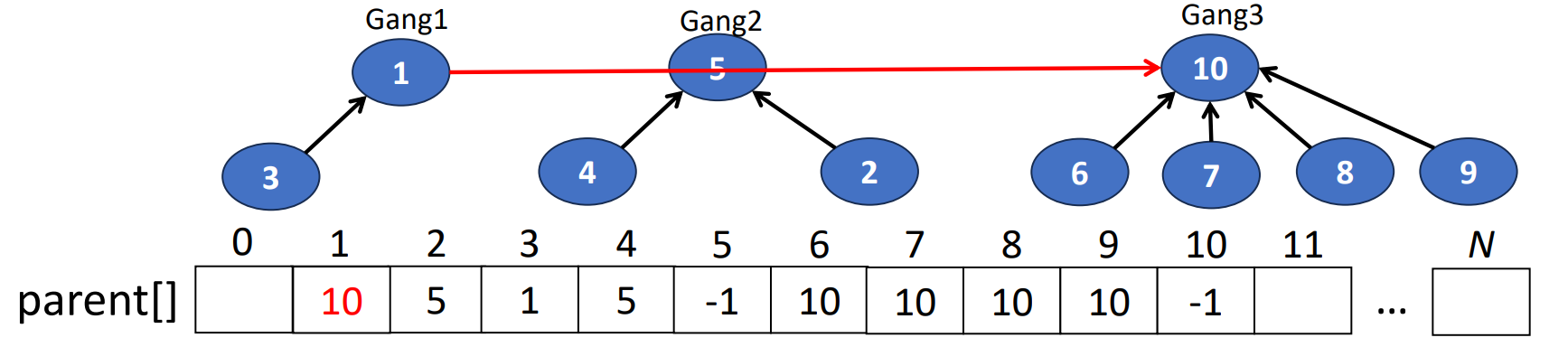

return i;Union(i, j)

- Combine 2 disjoint sets into 1

- & are the roots of different trees, so

- For tree representation, we set the parent field of one root pointing to the other root

- Which is making one tree as a subtree of the other.

parent[j] = i - Time complexity is

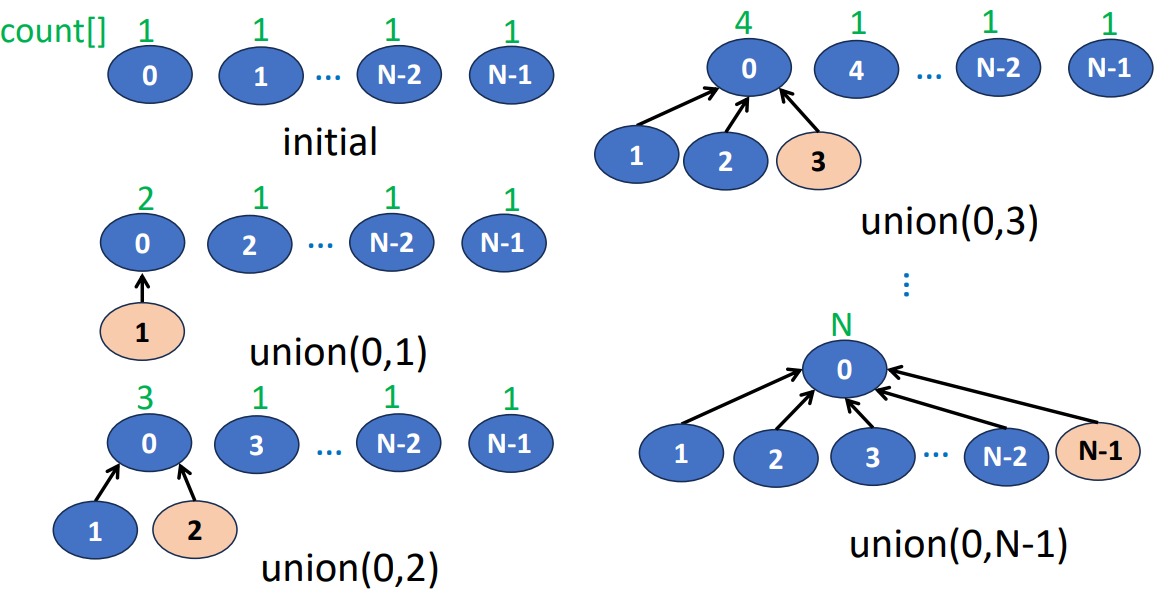

Sequence of Union-Find Operations

For each find(0), we trace from 0 to the root.

- Time complexity

- Time to initailize

parent[i] = 0for all elements is - times of

union(), each time takes , so total is - times of

find(), each time takes , so total is

- Time to initailize

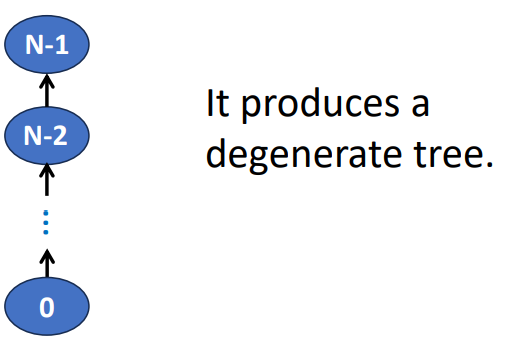

How to avoid the creation of degenerate tree? Let’s see in next part of this chapter!

Weight Rule for Union(i, j)

- Make tree with fewer number of elements a subtree of the other tree

- The count of the new tree is the sum of the counts of the trees that are united

Height Rule for Union(i, j)

- Make tree with smaller height a subtree of the other tree

- The height increases only when two trees of equal height are united

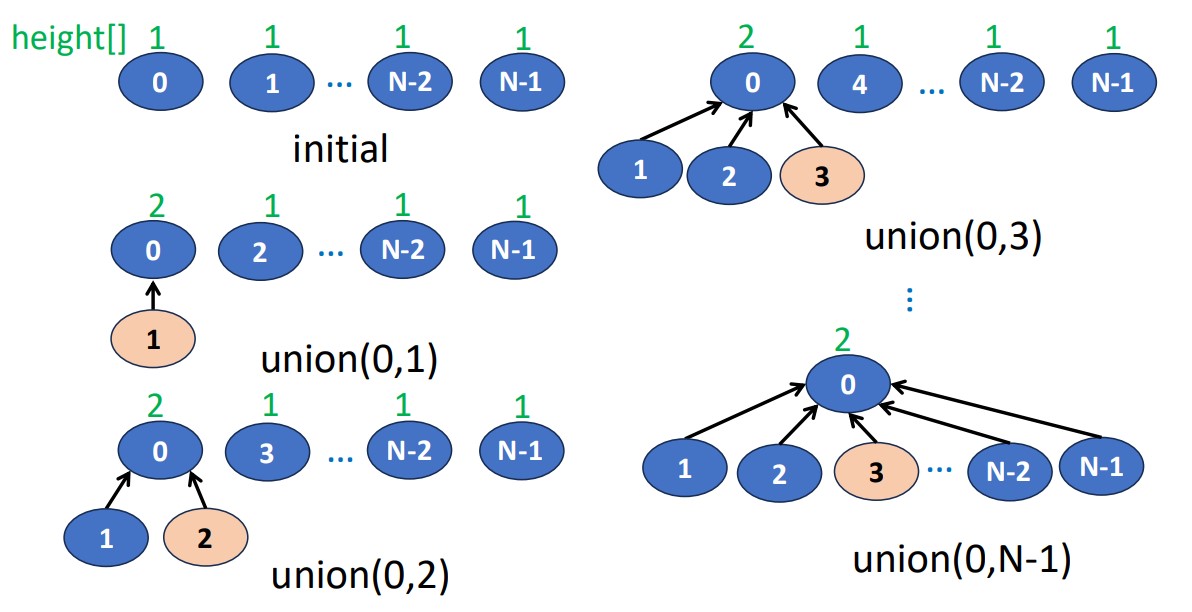

Collapsing Rule

Lemma 1

Suppose we start with single element trees and perform unions using either the height rule or the weight rule

The height of a tree with element is at most

Processing an intermixed sequence of unions and finds, the time complexity will be

unions part, we generate a tree with nodes

finds part, it requires at most

Improving Find(i) with Collapsing Rule

Collapsing rule means:

- Make all nodes on find path point to tree root

- Pay some more efforts this time, but the

Find()operations in the future could save time - Slower this time, faster next time

Here’s an example, if we have a sequence to this tree.

- Without collapsing rule

- A requires climbing up 3 times

- Total is moves

- With collapsing rule

- The first requires climbing up 3 times

- The remainding only needs to climbing up once

- Total is moves

Lemma 2 (By Tarjan and Van Leeuwen)

Let be the maximum time required to process any intermixed sequence of finds and unions. Assuming that

where and are constants, is the number of elements, and is inverse Ackermann function.

- Ackermann function

- A function of 2 parameters whose value grows very, very fast

- Inverse Ackermann function

- A function of 2 parameters whose value grows very, very slow

Time Complexity

Those bounds in Lemma 2 can be applied when we start with singleton sets and use either the weight or height rule for unions and collapsing rule for a find. The time complexity will be , which is very efficient.

Graphs

Intro

Now, let’s talk about the brains of the humanities. In out brain, each region is associated with different functions. Just like the graph below.

But the works in reality are complicated, so we usually use multi-part of out brain to achieve a task. That’s what we can represented by a graph. It will be easier for us to visualize and further analysis.

Graph can be represented as this form.

- : Set of vertices

- Vertices are also called nodes or points

- : Set of edges

- Each edges connected 2 different vertices

- Edges are also called arcs & lines

- In undirected graph, and represent the same edge

- In directed graph, and represent different edges

With graph, we can do a lot of applications, such as planning a route from city A to city B, witch we can let vertices to be cities, edges to be roads and edge weight to be the distances or times we need. Now, let’s go to the next part and know more terms of graph.

Terminology

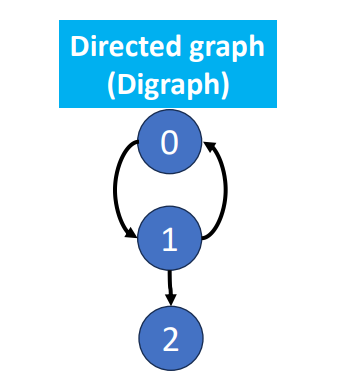

Directed Graph

There’ direction between the connections of the vertices.

Undirected Graph

There’s no direction between the connections.

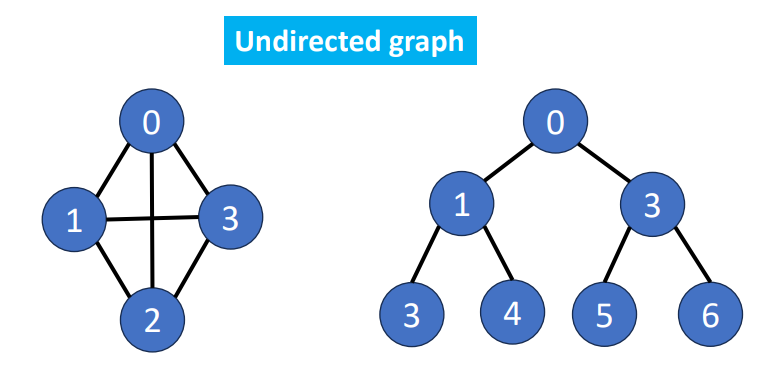

Subgraph

A graph that is composed of a subset of vertices and a subset of edges from another graph.

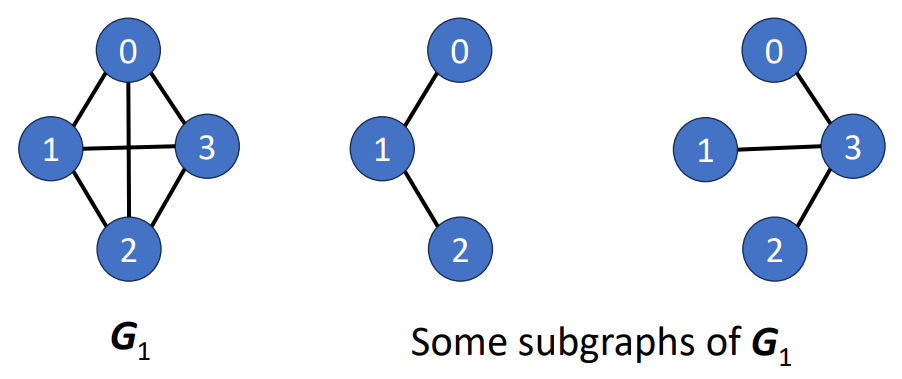

Path

Length of a path is the number of edges on it.

Simple Path & Cycle

- SImple Path

- No repeating vertices (except for the case that the first and the last are the same)

- Cycle

- Simple path

- The first and the last vertices are the same

Connection

- 2 vertices and are connected if and only if there’s a path from to

- Connected component

- A maximum subgraph that are connected to each other

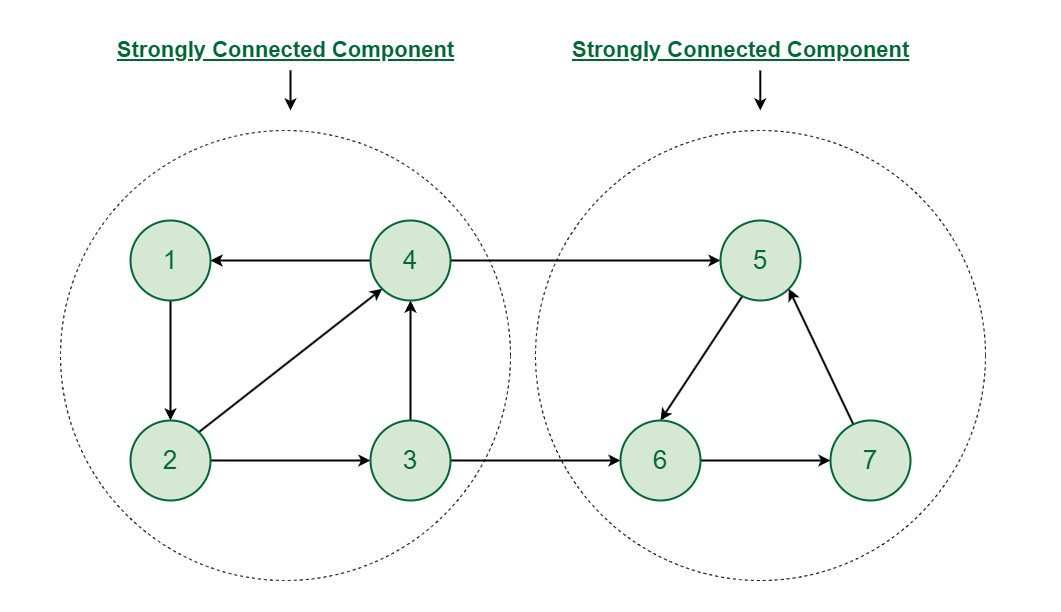

- Strongly connected component

- In a direct graph, every pair of vertices and has a directed path from to and also from to

Complete Graph

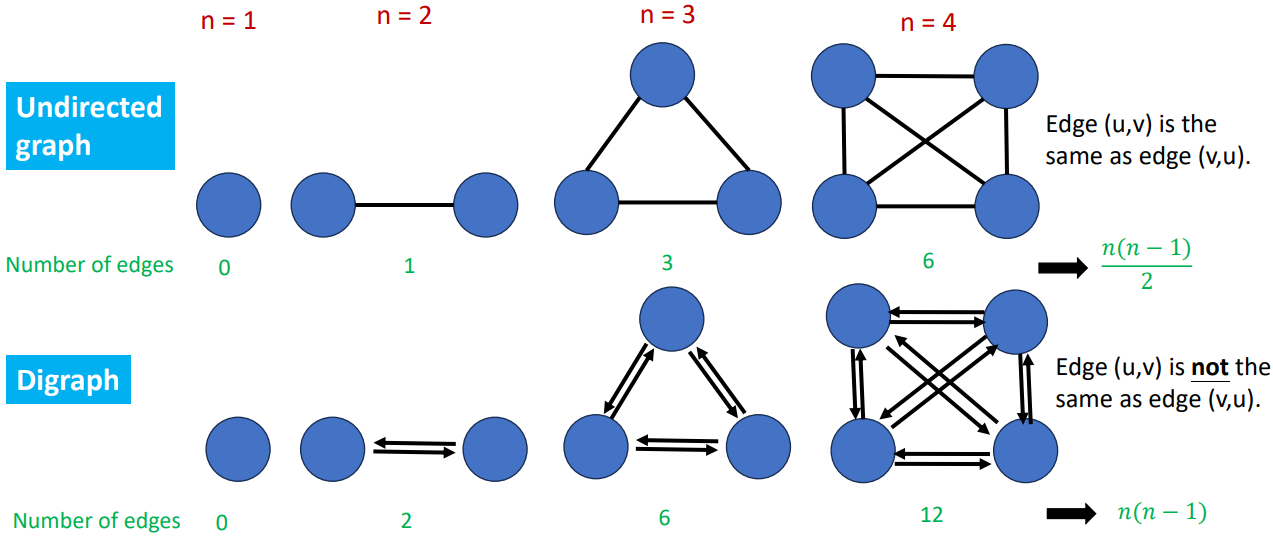

- Having the maximum number of edges

Properties

Number of Edges

If we set to be the number of vertices and to be the number of edges, then

- Undirected Graph

- Directed Graph

Vertex Degree

- Number of edges incident to vertex

- Directed graph

- In-degree, which is pointed into the vertex

- Out-degree, vice versa

Sum of degree

The number of edges is , then

- Undirected Graph

- Since each edges contributes to vertex degree

- Directed Graph

Trees & Spanning Trees

Tree

- Acycilc Graph

- A graph with no cycles

- Connected Graph

- All pairs of nodes are connected

- vertices connected graph with edges

Spanning Tree

- A tree

- A subgraph that includes all vertices of the original graph

- If the original graph has vertices, then the spanning tree will have vertices and edges

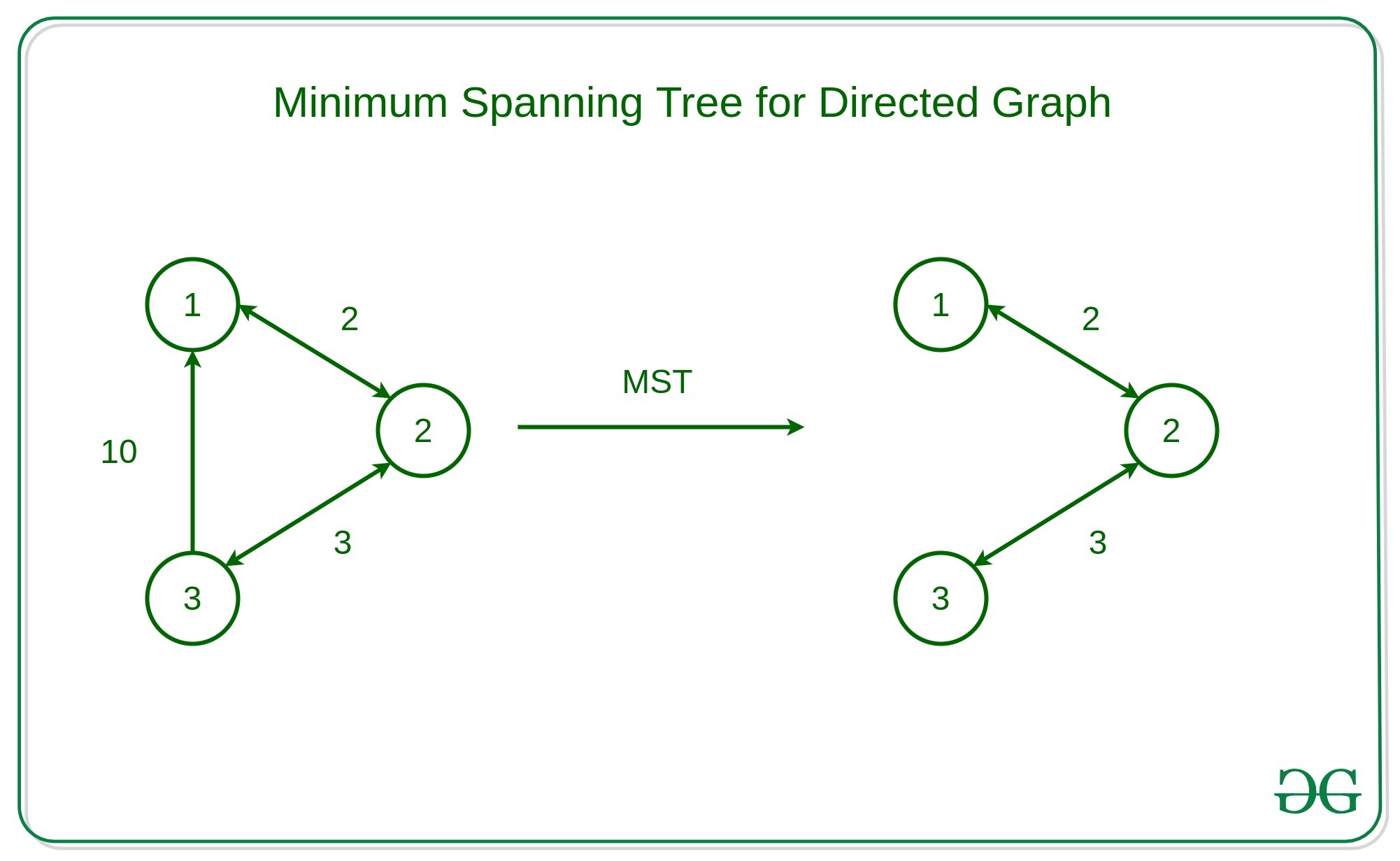

Minimum Cost Spanning Tree

- A spanning tree with least cost (or weight)

- Tree cost means the sum of edge costs/weights

Graph Representaions

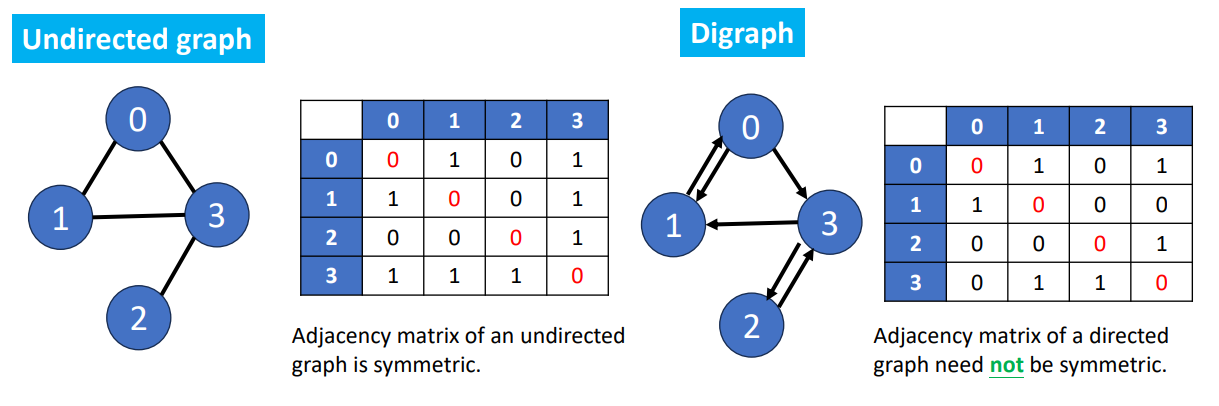

Adjacency Matrix

- Using 2-Dimensional by matrix

- is number of vertices

- means there to be an edge between vertices and

- Diagonal entries are all

Traversing this matrix will have the time complexity , it seems to be okay in a small matrix. But what if the matrix is large and sparse? There’s only a little 1s in the matrix, which are the things we care (cause we don’t care about the zeros), how can we improve the time complexity? We can use other ways to represent an graph.

Linked Adjacency List

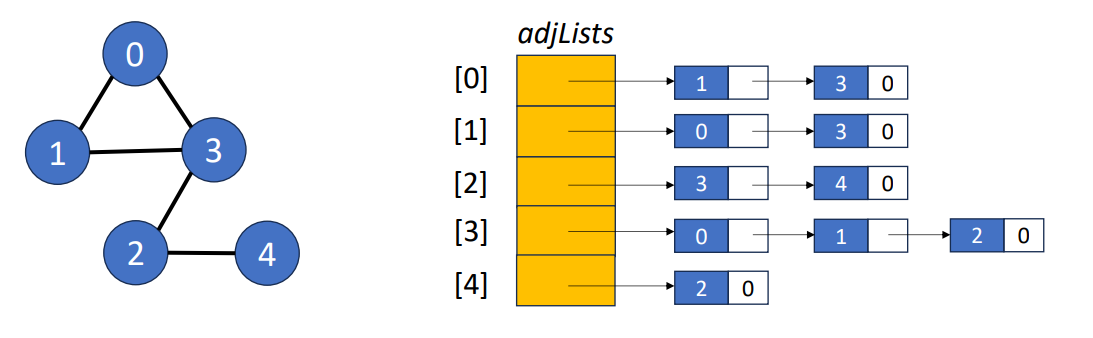

Undirected Graph

- Each adjacency list is a chain. One chain for each vertex

- Array length will be

- The data field of a chain node store an adjacency vertex

- In undirected graph, total number of chain nodes will be

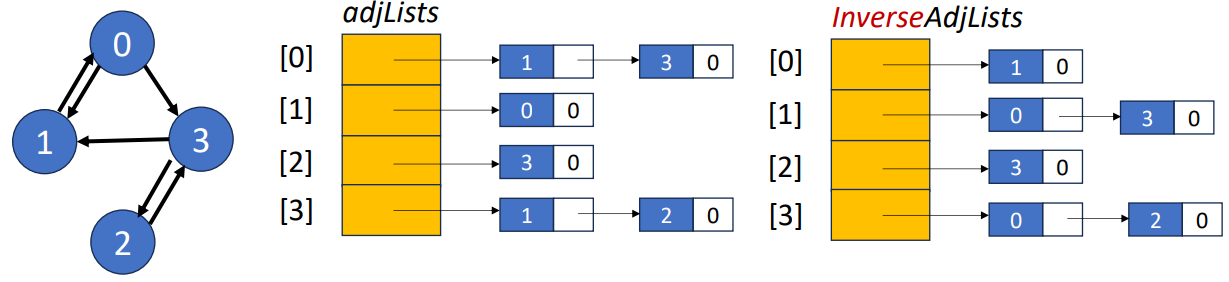

Directed Graph

- Total nubmer of chain nodes is

- The data field of a chain node store an adjacent vertex

- In inverse linked adjacency list, the data field of a chain node store the vertex adjacent to the vetex it represents

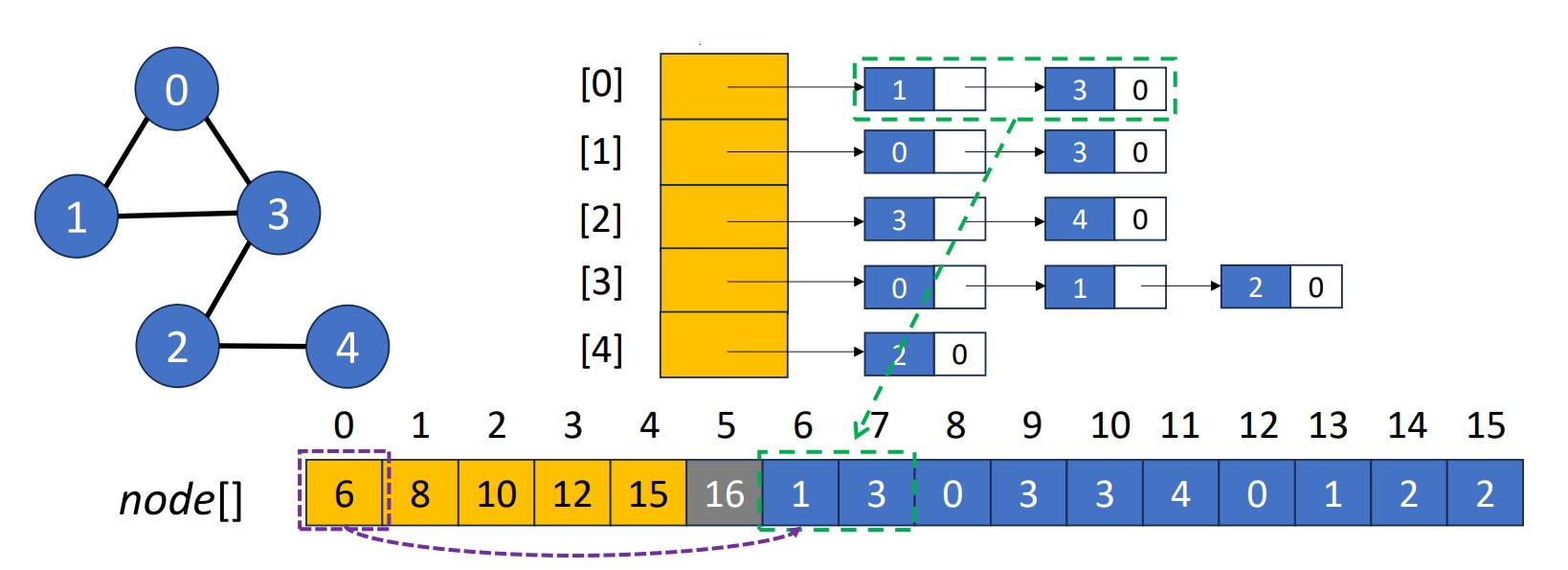

Array Adjacency List

- Using an integer array

node[]to store all adjacency lists- Array length will be

- The element in

node[0, 1, 2, ..., n-1]is the starting point of the list for vertex

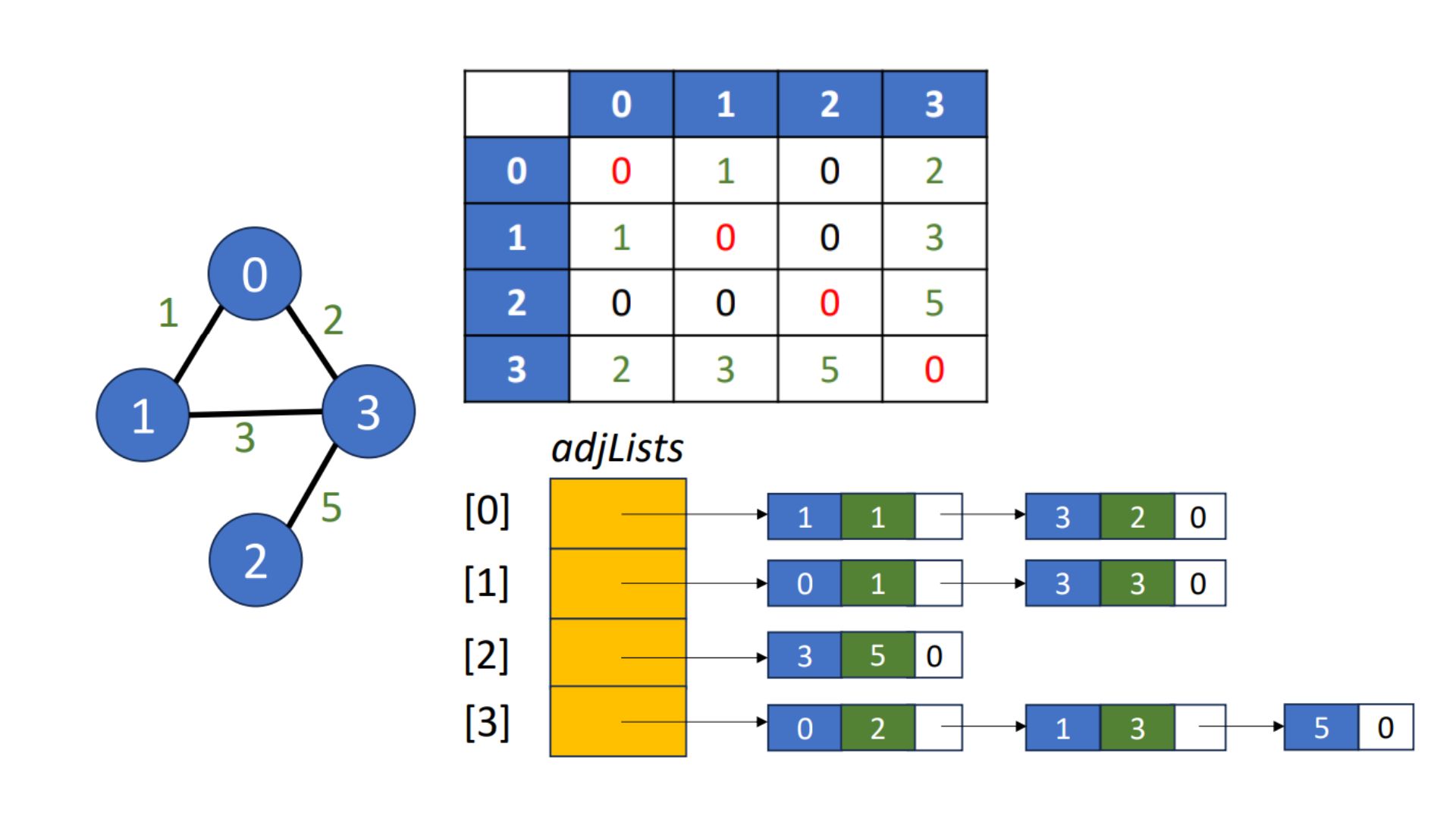

Weighted Graphs

- Weighted adjacency matrix

- is cost of edge

- Weighted adjacency list

- Each element is a pair of adjacent vertex & edge weight

- A graph with weighted edges is called a network

DFS & BFS

To search element in a graph, we need to introduce 2 algorithms, depth first search and breadth first search.

- Depth First Search (DFS)

- Similar to a preorder tree traversal

- Breadth First Search (BFS)

- Similar to a level-order tree traversal

If there’s a path from a vertex to another vertex , we say is reachable from . A search method is to traverse/visit every reachable vertices in the graph.

DFS

Here’s the pseudo code and animation of DFS.

short int visited[MAX_VERTICES];

/*Depth first search of a graph beginning at v.*/

dfs(v){

/*Label vertex v as reached.*/

visited[v] = TRUE;

/*Explore the adjacent unvisited vertices.*/

for (each unreached vertex u adjacent from v)

dfs(u);

}BFS

- Visit start vertex and put into a FIFO queue

- Repeatedly remove a vertex from the queue, visit its unvisited adjacent vertices, put newly visited vertices into the queue

Here’s the pseudo code and animation of BFS.

// From VisuAlgo

BFS(u), Q = {u}

while !Q.empty // Q is a normal queue (FIFO)

for each neighbor v of u = Q.front, Q.pop

if v is unvisited, tree edge, Q.push(v)

else if v is visited, we ignore this edgeTime Complexity

- Adjacency matrix, the time complexity is

- For each node, searching the corresponding row to find adjacent vertices takes

- Visiting at most nodes takes

- Adjacency list, the time complexity is

- Search at most edges and nodes

Application: Articulation Points

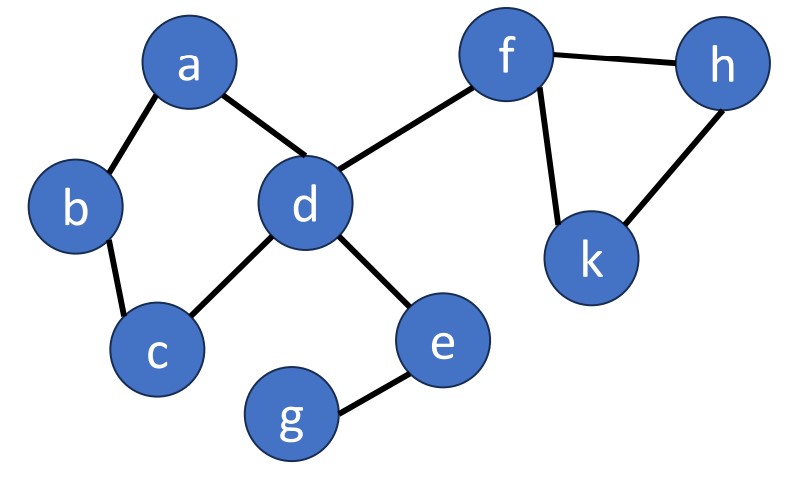

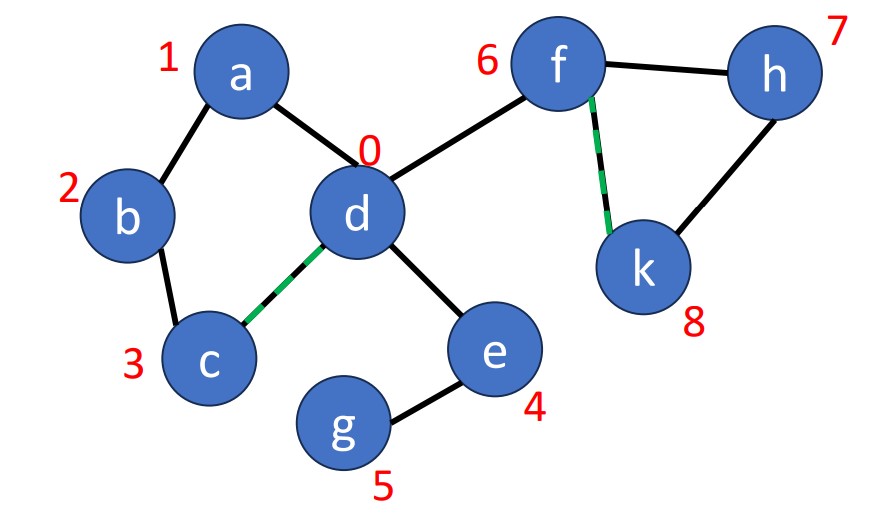

Articulation Point means if a vertex is deleted, at least 2 connected components are produced, then the vertex is called articulation point. In the graph below, and are articulation points, for example.

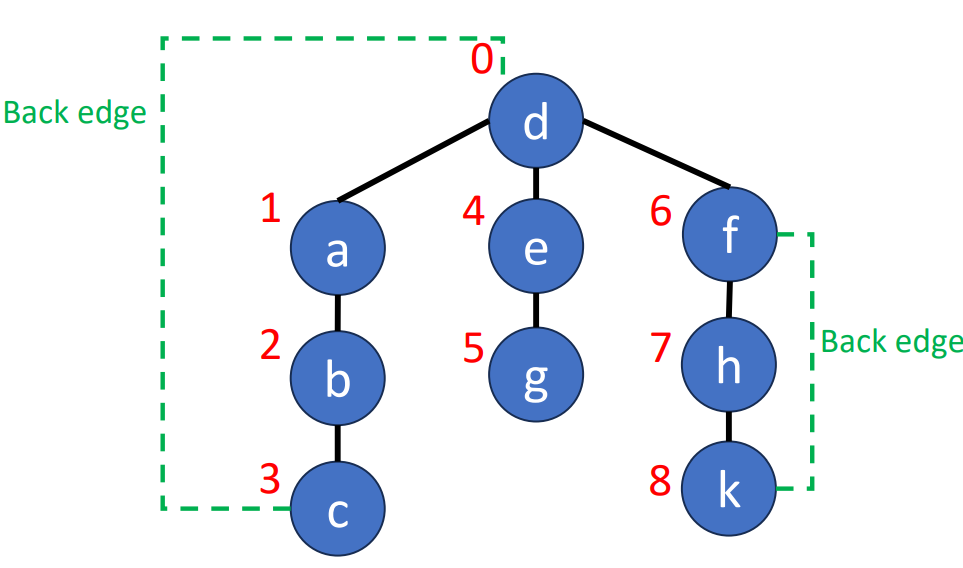

To find a articulation point, we can generate a depth-first search spanning tree. Using the graph above as an example, we use dfs(d) to generate a spanning tree.

- For root

- , then is an articulation point

- For a non-root vertex

- A child of vertex cannot reach any ancestor of vertex via other paths, then is an articulation point

Minimum Spanning Trees (MST)

Intro

- In a weighted connected undirected graph

- is number of vertices

- A spanning tree of the least weights

- Weights/Costs is the sum of the weights of edges in the spanning tree

- Edges within the graph

- Number of edges is

How can we derive an MST from a graph?

Kruskal’s Method

- Start with an forest composed of vertices and edges

- Select edges in nondecreasing order of weight

- If not form a cycle with the edges that are already selected

Pseudo Code

T = {};

while (|T| < N-1 and E is not empty){

choose a least cost edge (v,w) from E;

E = E – {(v,w)}; /*delete edge from E*/

if (adding (v,w) doesn’t create a cycle in T)

T = T + {(v,w)}; /*add edge to T*/

}

if (|T| == N – 1) T is a minimum cost spanning tree.

else There is no spanning tree.Data Structures for Kruskal’s Method

- Operations related to

- Check if the edge set is empty

- Select and remove a least-weight edge

- Use a min heap or leftist heap for edges set

- Time complexity

- Initialization:

- Remove and return least-weight edge:

- Operations related to

- Check if has edges

- Examine if adding to creates a cycle

- Each connected component in is a set containing the vertices, like

- Adding 2 vertices that are already connected creates a cycle

- Using

find()operation to determine if and are in the same set

- Add an edge to

- If an edge is added to , the 2 connected components that have vertices and should be merged

- Using

union()operation to merge the set containing and

- Use disjoint sets to process

Time Complexity

- Operations for edge set

- Initialize min heap or leftist heap:

- Operations to get minimum weight edge:

- At most times of operation:

- Operations for vertices

- Initialize disjoint sets:

- At most find operations and union operations: close to

- Overall:

- For each iteration, time for union-find operation is less than that for obtaining minimum cost edge

Prim’s Method

Prim’s Algorithm is an greedy algorithm.

- Start with a tree composed of vertex

- Grow the tree by repeatedly adding the least weight edge until it has edges

- Only one of and is in

Pseudo Code

// From VisuAlgo

T = {s}

enqueue edges connected to s in PQ (by inc weight)

while (!PQ.isEmpty)

if (vertex v linked with e = PQ.remove ∉ T)

T = T ∪ {v, e}, enqueue edges connected to v

else ignore e

MST = THash Tables

Intro

- A table to store dictionary pairs

- A dictionary pair includes

(key, value) - Different pair has different key

- A dictionary pair includes

- Operations

Search(key)Insert(key, value)Delete(key)

- Expected Time

Example

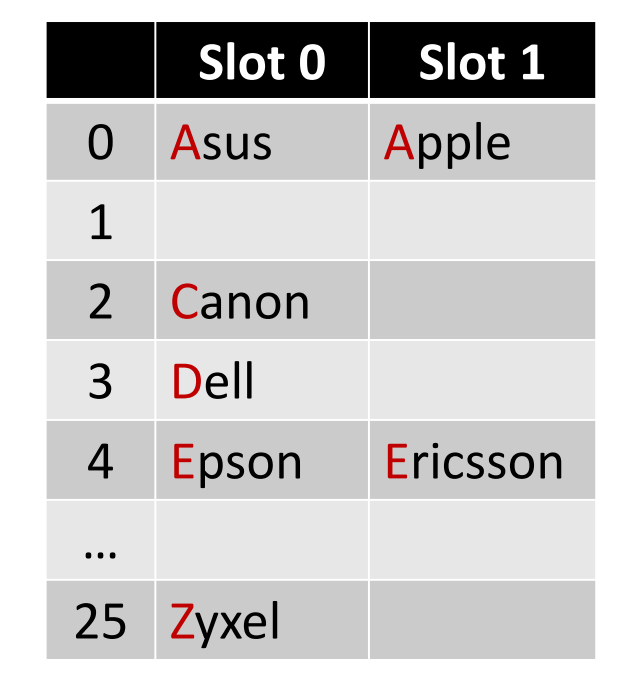

Insert the pairs with the following keys to the hash table. Asus, Canon, Zyxel, Epson, Ericsson, Apple, Dell

- Hash Table

ht- 26 buckets (26 alphabets)

- 2 slots per bucket (like “a” bucket holds 2 pairs, Asus & Apple)

- Hash Function

h(k)- Map the first character of a key from to

Loading Density

- Loading Density

- is number of pairs in the table

- is number of slots per bucket

- is number of buckets

So in the previous example, we have the loading density .

Synonyms

- keys are synonyms if

h(k1)==h(k2)- In the previous example, Asus and Apple are synonyms

- When Inserting Pairs in Previous Example

Insert(Cisco, 1000)- The home bucket isn’t empty

- Collision

Insert(Acer, 1000)- The home bucket is full

- Overflow

- Collision & Overflow occur simultaneously when each bucket has only slot

Ideal Hash Functions

- Easy to compute

- Minimize number of collisions

- No biased use of hash table

h(k)is independently and uniformly at random from to- The probability of a bucket to be selected is

Hash Functions: Division

- Division

- Use the remainder

- Have at least buckets in the hash table

- Example

- Inserting pairs

- Hash table with slots,

ht[0:10] - Hash function:

Hash Functions: Folding

- Partition the key into several parts and add all partitions together

- Shift folding

- and we partition it into digits long, then

- Folding at the boundaries

- Reversing every other partition and then adding

- and we partition it into digits long, then

Overflow Handling

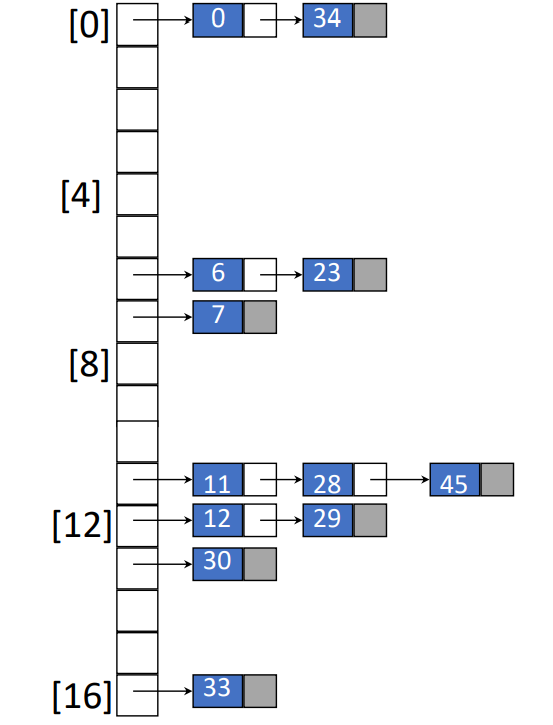

Chaining

Intro

- A linked list per bucket

- Each list contains all synonyms

- Example

- Inserting pairs whose keys are

- Hash function is

- Average chain length is

Expected Performance

If we have a chained hash table with uniform hash functions

- Average chain length is

- is number of data items in hash table

- is the number of buckets (number of chains)

- Expected number of key comparisons in an unsuccessful search

- Expected nubmer of keys on a chain

- Expected number of key comparisons in a successful search

- When the key is being inserted, the expected number of keys in a chain is

- The expected number of comparisons needed to search for is . (Assuming that new entry will be insert)

- Find the key, averaged over

Open Addressing

- Search the hash table in some systematic fashion for a bucket that is not full

- Linear probing (linear open addressing)

- Quadratic probing

- Rehashing

- Random probing

Linear Probing

Search

- Search the hash table in the following order

- is hash table

- is the hash function

- is the number of bucket

Insert

- The insert terminate when we reach the first unfilled bucket

- Insert the pair into taht bucket

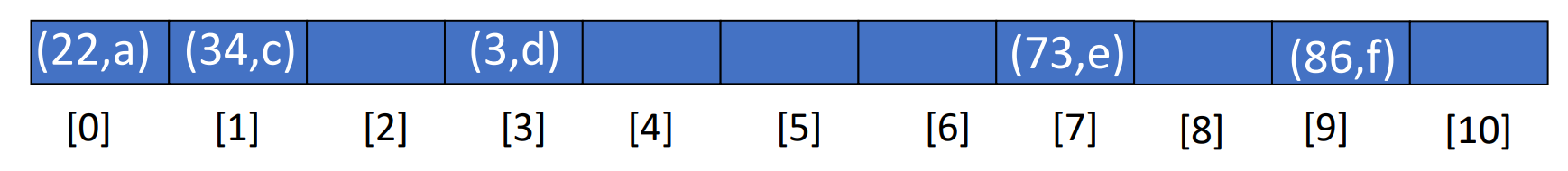

For example, we have a hash function and . Insert pairs whose keys are

| Index | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Value | 34 | 0 | 45 | 6 | 23 | 7 | 28 | 12 | 29 | 11 | 30 | 33 |

Delete

In linear probing, we cannot directly delete an element in the hash table. Since if we do that, the corresponding position will be None, that way, the search function will return when encounter the None, so all the elements after None won’t be visited. To solve it, we use Tombstone to replace the none, we put a Tombstone in the element place we want to delete, and this is call lazy deletion.

Expected Performance

Loading density of a hash table takes

- is number of data item in hash table

- is number of buckets

When is large and

- Expected number of key comparisons in an unsuccessful search

- Expected number of key comparisons in a successful search

is recommended

Proven by Knuth, 1962

Quadratic probing

In quadratic probing, we search elements in the following order. ( is hash function, is number of buckets)

For example, if , where

Rehashing

- Create a series of hash functions

- Examine buckets in the order of

Perfomances

- Worst case for find/insert/delete time is , is the number of pairs in the table

- Open addressing

- This happens when all pairs are in the same cluster

- Chaining

- This happens when all pairs are in the same chain

- Open addressing

Bloom Filters

Intro

- When

- Returning maybe and No are acceptable

- What

- Bit array

- Uniform and independent hash functions

- Limitations

- The naive implementation of the bloom filter doesn’t support the delete operation

- The false positives rate can be reduced but can’t be reduced to zero

Operations

Insert(k)

Given bits of memory and hash functions, then

- Initialize all bits to be

- To insert key , set bits

- So key will make multiple indices changes

Member(k, BF)

Search for key

- ANY means is not in the set

- ALL means may be in the set

Performances

Assume that a bloom filter with

- bits of memory

- uniform hashed functions

- elements

Consider the bit of the bloom filter

- Probability to be selected by the hash function

- Probability of unselected by the hash function

- Probability of unselected by any of hash functions

- After inserting elements, probability of unselected by any of hash functions

- After inserting elements, probability that bit remains

- After inserting elements, probability that bit is

Probability of false positives

- Take a random element and check

- The probability that all bits in are

Design of Bloom Filters

- Choose (filter size in bits)

- Large to reduce filter error

- Pick (number of hash functions)

- is too small

- Probability of different keys having same signature is high

- Test more bits for

- Lower false positive rate

- More bits in are

- Higher false positive rate

- is too large

- The bloom filter fills with ones quickly

- Test less bits for

- Higher false positive rate

- More bits in are

- Lower false positive rate

- is too small

- Select hash functions

- Hash functions should be relatively independent

Given bits of memory and elements, choose

- Probability that some bit is

- Expected distribution

- bits are and vice versa

- Probability of false positives

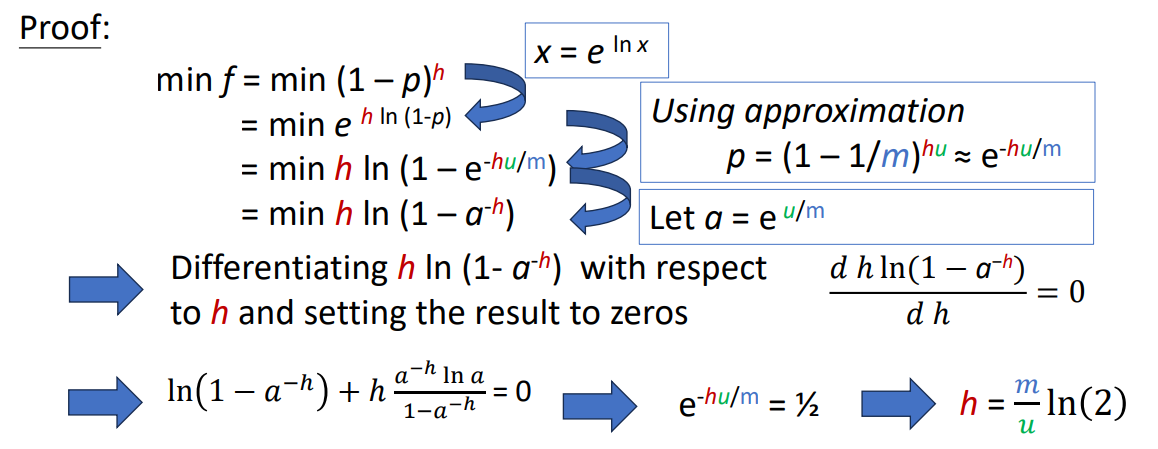

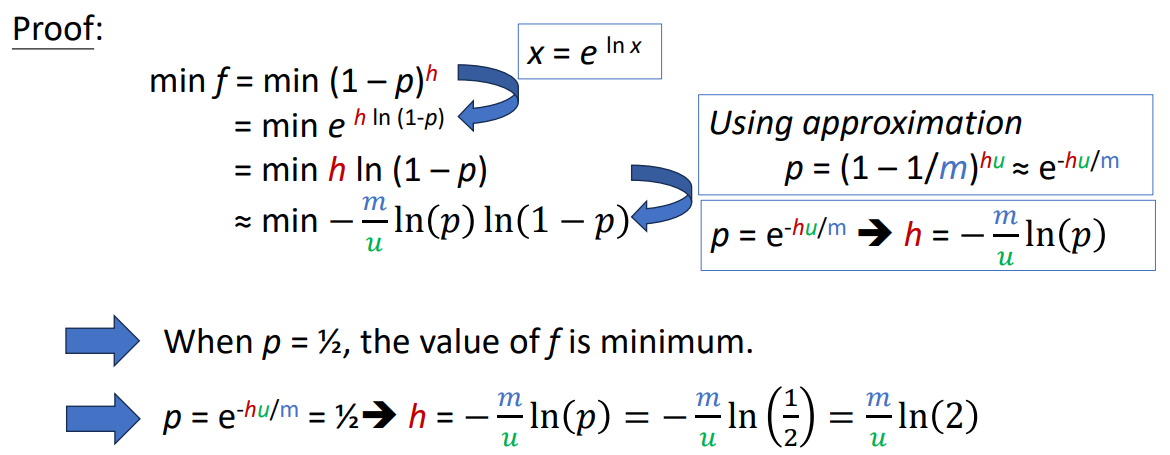

Minimize The False Positive Rate

Assume that the filter size and the number of elements in the filter are fixed, minimizes false positive rate if

Proof 1

Proof 2

Binomial Heaps

Leftist vs Binomial Heaps

This is a table of time complexity for different operations.

| Operations | Leftist Heaps | Actual Binomial Heaps | Amortized Binomial Heaps |

|---|---|---|---|

| Insert | |||

| Delete min (or max) | |||

| Meld |